Winter 2026: Exploring Gen-AI Tools in a Virtual Production Pipeline Video (SIGGRAPH SOIREE 2026)

Our SIGGRAPH SOIREE 2026 Fast-forward video “Exploring Gen-AI Tools in a Virtual Production Pipeline” shows how NCSU Media Arts, Design and Technology (MADTech) faculty and students applied generative AI tools in a real-world sponsored studio project last spring in collaboration with Los Angeles-based VFX company Alecardo. Colombian-American founder Ricardo Tobon was awarded a virtual production fellowship by Mark Hoversten, Dean of the College of Design, and was hosted by MADTech faculty Topher Maraffi for five days in our Virtual Production Lab. Tobon and his partner Alex Restrepo adapted the first South American Sci-fi novel, Las fuerzas extrañas (1906) by Leopoldo Lugones, into a five-minute virtual production narrative featuring a giant talking octopus with alien powers held captive in a jungle lab. With such a short production schedule and a budget of only $15K, Maraffi explored the use of AI tools to speed up each phase of design and reduce costs, while requiring that the quality of the VFX be suitable for a professional client.

As a MADTech representative on the college’s AI Task Force, Maraffi was testing AI tools for targeted applications in preproduction, production and postproduction in our lab. For preproduction, graduate students used Blockade Labs AI Skybox to generate a variety of equirectangular images based on Tobon’s concept art for the abandoned tropical lab. Maraffi then creatively upscaled the best ones using Magnific AI’s Mystic tool, which re-styles upscaled images to make them more realistic and detailed. After Alecardo selected the background, we converted it to an HDRI Backdrop in Unreal Engine 5. Then in production we used RADiCAL Motion Live streaming to puppeteer the octopus rig by connecting it to a hidden MetaHuman in the UE5 scene. Maraffi used a single webcam to perform the creature and trigger virtual lights that indicated the emotional state of the character. The real-time control of the octopus gave Restrepo, the live actress playing the scientist, a virtual acting partner to react to in the volume while lab manager Ryan Khan shot the cinematography for the climax.

In postproduction, we used AI to create VFX during the climax when the researcher is obliterated by a storm of cosmic particles unleashed by the angry alien octopus. We input source frames shot in the volume as start and end frames for a Disintegration effect in Higgsfield AI. This would have been a time-consuming and expensive visual effect to do with standard CGI methods, but Higgsfield rendered an approved version with minimal iterations. This case study demonstrates the potential of gen-AI tools to enhance the virtual production pipeline, resulting in a short that has since won multiple awards at film festivals. Tobon was the keynote speaker at our 2025 MADTechFest, and he stated that in all his years of working professionally at industry studios like Sony, DreamWorks and Weta, he had never completed a project of this quality in such a short time frame. We believe Gen-AI tools contributed to the success of this project and are exploring these emerging tools further in other sponsored studios.

Watch the full 5-minute short film on YouTube…

Fall 2025: MADTech Virtual Production Demo Reel (ADN492-502)

NCSU MADTech faculty Topher Maraffi co-taught ADN492-502 Special Topics: Intro to Virtual Production in Fall 2025, with teaching faculty Jed Gant and technical support from College of Design Virtual Production Lab manager Ryan Khan. This demo reel shows selected student projects and excerpts from a sponsored studio with Visit Raleigh to create Esports tourism promo shots and a collaboration with Department of Performing Arts and Technology faculty Allison Beatty on a screen dance project. We also include some footage of shots done by our students for USA Baseball for the World E-Baseball Championships at NC State.

Fall 2025: MADTech ADN460 Creative Tech Studio Student Demo Reel

Demo reel of selected NCSU Media Arts, Design and Technology (MADTech) student work in Topher Maraffi’s ADN460 Creative Tech Studio 2 for the Fall 2025 semester. Students created digital doubles with Reality Scan and MetaHumans in Unreal Engine 5.6 and animated them with performance capture using Rokoko and Noitom suits and MetaHuman Animator. They also designed games and environments in Fortnite Creative, Unreal Engine and UEFN.

Fall 2025: Historical Fiction Project Pre-Production

I am developing a historical fiction film project starring Samuel Clemens, Frederick Douglass, Charles Dickens, Harriet Tubman, Paschal Beverly Randolph, Olivia Langdon (Clemens), and other literary and political “rock stars” of the late nineteenth century. Based on real events and extraordinary people during the Reconstruction era (1866-1900), including abolitionists, suffragists, technologists and even spiritualists, these short serial dramas will be both entertaining and educational. This will be a test to see if we can use emerging technologies like performance capture, generative AI and virtual production to ethically create storytelling media that has never been seen before: actor- and artist-driven animated performances that use AI to look and sound like believable historical figures come to life with personality and contemporary appeal. Here is an early AI test of Charles Dickens that begins to have this hyper-reality aesthetic, using Hedra and ElevenLabs to alter my performance (I even like the little sleight-of-hand tricks Dickens is doing with his prompt copy, though object permanence is still an issue):

Fall 2025: MADTech AI Town Hall Meeting

We had a town hall meeting to discuss with students how faculty are integrating generative AI tools into our curriculum and to hear their views and concerns about the technology. As a member of the Dean’s AI Task Force and Curriculum Committee, I spoke about how I am intentionally testing gen-AI tools for artistic control and ethical use in pre-vis for concept art, production of skybox backgrounds/suit-less mocap, and post-processing VFX. I showed some examples of unique work that incorporated some AI processing and a list of the AI tools I am testing for use in our production pipelines. I made it clear that AI in my classes will be used to extend and enhance artist-created designs and not to replace the design process by just typing text prompts. I pointed out ethically sourced tools like Moonvalley that were developed by filmmakers for artistic control, and noted that generative AI tools are already being integrated into our foundational software like Adobe Photoshop. Discussing the issues surrounding gen-AI with students and faculty has made me think more deeply about my own position that we need to engage with this emerging technology in a spirit of artistic and critical enquiry, and I plan on writing a formal argument for publication that gen-AI can and should be used for artistic practices in an ethical way.

Artists have always been technical, going all the way back to Renaissance painters like Leonardo Da Vinci, and digital technologies have increasingly simulated artistic techniques like chiaroscuro and perspective in digital media, such as in 3D games like Senua’s Saga: Hellblade 2 (by Ninja Theory, 2024). Gen-AI is the next iteration of this process where AI agents model artistic technique and style from data features in media artifacts. While it is important to discuss the ethical and licensing issues of training AI models on public works of art, it is equally important to consider that artistic style has never been copyrightable. I actively engaged in the “Photoshop of AI” debate back in 2012, and my UC Santa Cruz Computer Science Performatology research in Arnav Jhala’s Computational Cinematics Studio used machine learning to study mocap data of dancers for performing arts features. That work was an early indicator that a Photoshop of AI was likely coming, and now we have many competing Photoshops of AI and Adobe Photoshop with gen-AI. A recent example is “Meta AI” (2025) by my former graduate student Leo Li, which featured a Leonardo AI MetaHuman in Unreal Engine that used the gen-AI software Convai to intelligently answer questions, with Da Vinci’s notebooks as a knowledge base for performing embodied animation and dialogue.

Fall 2025: Coming Soon! Mitchelville and Dix Park Fortnite Experiences

We have submitted an Epic Games Megagrant to develop a Mitchelville educational experience in Fortnite featuring MetaHuman digital doubles of Gullah-Geechee community leaders, scholars, and elders telling the story of the heritage site using Unreal Editor for Fortnite (UEFN). We have also submitted a Megagrant in collaboration with NCSU Parks, Recreation and Tourism Management faculty Nathan Williams to develop a Fortnite digital twin of Dix Park using UEFN:

Fall 2025: Las Fuerzas Extrañas Won Best Sci-Fi Short at San Diego Movie Awards!

The Sci-Fi short film NCSU MADTech produced with Alecardo last semester in the College of Design Virtual Production Lab, Las Fuerzas Extrañas (Strange Forces, 2025), has received an Award of Excellence for Best Sci-Fi Short at the San Diego Movie Awards hosted by the Museum of Photographic Arts! So far we have also been named a Finalist at the Manhattanhenge Film Festival in New York, and received an Honorable Mention from the Kraken International Film Festival in Milan, Italy and Official Selections from the Brooklyn SciFi Film Festival, Crossing Shibuya Japan AI Festival, and Seoul International AI Film Festival. The film is an adaptation of the first Latin American Sci-Fi novel, published by Leopoldo Lugones in 1906, made using the latest real-time and emerging technologies in our state-of-the-art virtual production facility. Congratulations to Ricardo Tobon, Alex Restrepo, Ryan Khan, and my many MADTech students who helped us shoot the film for Dean Hoversten’s first Virtual Production Fellowship!

Fall 2025: Tribute: Metahuman to Gen-AI Class Demo-Test

Tribute is an NCSU MADTech Creative Tech Studio class demo project and MetaHuman to Gen-AI test that uses the master Shakespeare performance of Andy Serkis (Unreal/3Lateral 2018) as source material to show how Unreal Engine 5.6.1 can use any video-audio to generate a MetaHuman facial animation. The unedited facial animation was then used to develop a custom Sci-Fi scene with my MetaHuman and voice, using ElevenLabs voice cloning and post-render processing in Topaz Labs Astra to AI upscale the result. Higgsfield AI was used for the final explosion VFX. 360 HDRI Skydome background done with Blockade Labs AI Skybox. Spaceship model and particle VFX from FAB. Sounds by Freesound.org. I am experimenting with a variety of video-to-video AI applications to develop more believable MetaHuman animations through post-process upscaling. It’s all about retaining artistic control of the changes and the expressiveness of the source performance while enhancing the visual aesthetics. Many of the gen-AI programs aren’t there yet, but this makes some progress towards that goal.

Summer 2025: Presented Metahuman Liveness at SIGGRAPH Spatial Storytelling Program in Vancouver

I presented “Metahuman Liveness: Performing Hyper-Reality Illusions in a Virtual Production Volume” at the inaugural SIGGRAPH 2025 Spatial Storytelling program in Vancouver. Here are my slides from the demo…

The latest Macbeth Metahuman project demo explores how to design conditional logics and state changes in Unreal Engine Blueprints for affording actor improvisation and enhancing liveness in a stage scene. I do this by giving the actor choices for creating interaction illusions with the MetaHuman characters in the LED wall by adding multiple trigger boxes in the scene for puppeteering different system responses with my gestures:

Depending on whether I go high or low with throwing some hot coals (LED lights) at one of the MetaHuman witches in the Macbeth scene, the system state changes to either Duck or Jump and creates the appropriate animation and VFX to create the illusion of an intelligent response…
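The conditional logic described above can be sketched outside of Blueprints as a small state machine. This is only an illustrative Python sketch, not the project's Blueprint graph: the names `WitchState`, `TriggerBox`, and `next_state`, the height thresholds, and the mapping of a high throw to Duck and a low throw to Jump are all assumptions made for the example, and the trigger boxes are reduced to height ranges for brevity.

```python
from dataclasses import dataclass
from enum import Enum


class WitchState(Enum):
    """Hypothetical system states for one MetaHuman witch."""
    IDLE = "idle"
    DUCK = "duck"
    JUMP = "jump"


@dataclass(frozen=True)
class TriggerBox:
    """A trigger volume reduced to a height range (assumed units: cm)."""
    name: str
    min_z: float
    max_z: float
    response: WitchState

    def contains(self, hand_z: float) -> bool:
        # Half-open range so adjacent boxes never both fire.
        return self.min_z <= hand_z < self.max_z


# Assumed layout: a low throw makes the witch jump, a high throw makes her duck.
TRIGGERS = [
    TriggerBox("low_throw", 0.0, 100.0, WitchState.JUMP),
    TriggerBox("high_throw", 100.0, 250.0, WitchState.DUCK),
]


def next_state(hand_z: float, triggers=TRIGGERS) -> WitchState:
    """Return the response of the first trigger box the hand height falls in."""
    for box in triggers:
        if box.contains(hand_z):
            return box.response
    # No box was hit, so the witch stays in her idle loop.
    return WitchState.IDLE
```

In an engine, the returned state would select which animation and VFX to play; here it is just a value the caller can branch on.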

To read more about my spatial design approach see my published Metahuman Liveness paper, and see the video demo below…

Summer 2025: Macbeth Metahuman Paper Published on HAL Open Science

The final version of my JIT2024 conference paper “Macbeth Metahuman: Performing Craig’s Über-Marionette in Hyper-Reality” was published on HAL Open Science and is available for download: https://hal.science/hal-05138650v1

Summer 2025: NCSU Design Life Article on MADTech Virtual Production Projects

A couple of our MADTech sponsored research projects were featured in this NCSU Design Life magazine article on the College of Design Virtual Production Lab: https://design.ncsu.edu/blog/2025/06/10/at-the-forefront-making-a-place-in-virtual-production/

Spring 2025: SIGGRAPH 2025 Immersive Pavilion Room Lead, Spatial Storytelling Acceptance, and Blog Post

After serving as the room lead for SIGGRAPH 2025 conference Immersive Pavilion jury, I am pleased to announce that my Macbeth Metahuman project demo has been accepted to the Spatial Storytelling program in Vancouver! Also, my SIGGRAPH 2024 Metahuman Theatre Educator Forum presentation on teaching mocap as a performing arts process was recently featured in the ACM SIGGRAPH Blog: https://blog.siggraph.org/2025/05/animating-the-self.html/

Spring 2025: MADTech Graduate Thesis Projects featuring Metahumans

Here are excerpts from three NCSU MADTech graduate thesis projects that explored Metahuman technology for real-time animation storytelling and interactive media experiences. Yasmeen by Fulbright scholar Sara Sherkyan shows an introspective reflection on confronting gender and cultural stereotypes in daily life through her Metahuman digital double. Meta AI by Leo Li reimagines Renaissance inventor and painter Leonardo Da Vinci as an interactive Metahuman that uses natural language and a generative AI knowledge database trained on Da Vinci’s notebooks to answer questions about Leonardo’s art and life. My Wish by Rebecca Pyun brings Korean “comfort woman” survivor Kim Hak Soon to life as a Metahuman using photogrammetry of her memorial statue and performance capture in a documentary format, highlighting the gender social justice issue from World War 2 that remains unresolved…

These three exceptional graduate thesis projects demonstrate the range of creative and educational applications of real-time Metahuman technology in Unreal Engine using photogrammetry, performance capture, real-time animation, and generative AI. I was a Chair and/or technical animation committee advisor for each of these student projects, and they all passed their MADTech Masters of Art & Design thesis defenses with flying colors. Some software used: Epic Games Unreal Engine 5, Metahuman Creator and Animator, Reality Capture, Noitom Perception Neuron Studio, Livelink Face, Convai, Sona AI, Runway AI, and Adobe Premiere Pro.

Spring 2025: Ricardo Tobon Keynote Speaker for MADTechFest 2025

Hollywood-based VFX artist Ricardo Tobon of Alecardo is our Keynote Speaker at NCSU MADTechFest today. He was our first NC State College of Design Virtual Production Fellowship recipient and will be speaking about our collaboration on shooting “Las Fuerzas Extrañas”, a short film adapted from the 1906 story by Leopoldo Lugones, and showing a first cut of the film. Here is behind-the-scenes footage from the four days we developed and shot the scene in our Virtual Production Lab. We used Epic Games Unreal Engine 5.5 and FAB for worldbuilding, RADiCAL Motion AI mocap for real-time creature control, and Blockade Labs AI Skybox and Magnific AI for background generation. My ADN460 Creative Tech 2 undergrad students and ADN561 Grad Hyper-Reality Studio students helped as production assistants during two days of testing and shooting. Ricardo’s Keynote will be Friday 04/25 at 1pm in Brooks 320 on the NCSU main campus.

Spring 2025: Alecardo Professional Virtual Production Shoot with NCSU MADTech

In April we hosted Los Angeles-based VFX artist Ricardo Tobon and Alex Restrepo of Alecardo at NCSU to shoot a professional Sci-Fi/Horror short film in our LED volume, funded by the Dean’s Virtual Production Fellowship in the College of Design at NCSU. I have known Ricardo for twenty years, since we were both faculty at Full Sail University in the 2000s, and he has since worked on blockbuster movies and AAA games in Hollywood. We shot in our studio over four days with help from MADTech students and faculty, culminating in a guest lecture by Ricardo on virtual production for my ADN561 Hyper-Reality Grad Studio course. MADTech faculty and students also helped develop the virtual and physical sets, digital and practical VFX, real-time AI mocap creature controls and 3D printed props. Some software programs we used were Unreal Engine 5.5 with Livelink, RADiCAL AI motion capture, Blockade Labs AI Skybox, and Magnific AI. The narrative was based on the first Latin-American Sci-Fi novel, Las Fuerzas Extrañas by Leopoldo Lugones (published in 1906), and features a giant talking octopus that we performed in real-time as a digital super-puppet using the RADiCAL AI Livelink plugin in UE5. Ricardo will also be our MADTechFest keynote speaker on April 25th, 2025. Big thanks to Ricardo and Alex for collaborating on this project with us, to our amazing cinematographer and virtual production lab manager Ryan Khan, and to my ADN460 and ADN561 students for helping to make the movie magic.

Spring Break 2025: Mitchelville Research Trip

In March I took ten students and two faculty to Beaufort County to gather spatial data at Historic Mitchelville Freedom Park (HMFP) on Hilton Head Island. The students received Global Experience credits by learning about Gullah-Geechee culture through Gullah Heritage tours on Hilton Head and a Reconstruction Era National Historical Park tour in Beaufort. We captured photogrammetry scans and performance capture of HMFP Director Ahmad Ward, award-winning performer Marlena Smalls (left), and Gullah Days author Carolyn Grant (right):

As part of their final deliverables, students designed individual research posters about their experience…

Students first learned how to create and animate MetaHumans of themselves using photogrammetry and performance capture in Unreal Engine 5.4 before working on data recorded at Mitchelville:

Here are some places we visited in Beaufort County over Spring Break…

Spring 2025 MADTech Guest Speaker: Curtis Hickman, Award-winning Immersive Experience Designer & Author Virtually Returns to NCSU

When: Thursday March 6th at 12:00pm Noon (pizza and drinks provided).

Where: Virtual Production Lab (Brooks 320) over Zoom

Speaker Bio: Professional illusionist, VFX artist, and co-founder of the VOID VR Theaters, Curtis Hickman created some of the most advanced location-based VR experiences with legendary IP from Disney and Sony like Star Wars, Ghostbusters and Avengers. For the third year in a row, Hickman, author of the critically acclaimed book Hyper-Reality: The Art of Designing Impossible Experiences (2023) and creator of ChatGPT’s Immersive Experience Designer tool, will speak to MADTech students and faculty over Zoom in our College of Design Virtual Production Lab in Brooks 320. Everyone in the College of Design is welcome to join us for pizza and some stimulating discussion about making extended reality experiences more immersive through illusionism.

Or join us from anywhere through Zoom: https://ncsu.zoom.us/s/96459781774

For more info on Curtis Hickman, see his website…

Winter 2025: New Conference Paper! MACBETH METAHUMAN: PERFORMING CRAIG’S ÜBER-MARIONETTE IN HYPER-REALITY

Abstract: An exciting application for virtual production is where actors appear to perform with life-sized digital doubles in a large LED wall for a location-based experience like a live show. The Macbeth Metahuman Theatre project combines Edward Gordon Craig’s über-marionette or super-puppet concept with Curtis Hickman’s hyper-reality design framework to create the illusion of intelligent actor-Metahuman interaction in a virtual production volume. Applying Craig’s critique of theatre acting and descriptions of an über-marionette to digital doubles, I develop practical criteria for super-puppets in immersive experiences. Then I discuss the initial results of adapting the opening scene of Shakespeare’s Macbeth for a hyper-reality treatment in a virtual production volume. Stage magic principles are used to hide the control mechanism for changing states in the Unreal Engine scene, so that a live actor wearing an inertial motion capture suit under their costume can puppeteer a hidden Metahuman double that hits invisible trigger boxes in the volume, driving the dramatic spectacle through special effects and animated Metahuman witches. I argue that this project fulfills the criteria for Craig’s concept of a super-puppet in his Theatre of the Future, and that this expanded virtual production pipeline offers live actors a new venue of creative expression as performance capture artists.

Download full paper published in JIT 2024 Computer Theatre Conf. proceedings on HAL Open Science…

Winter 2025: ADN311-502 Creative Coding in Games Demo Reel 2024

A selection of student game demos in my Creative Coding course using the MDA game design framework, concepts like dynamic Flow and narrative immersion, and developed mechanics in Fortnite Creative, Unreal Engine Blueprints, and Unity C# scripting…

Winter 2025: ADN460 Metahuman XR Project Highlights Reel 2024

NCSU MADTech student demo reel of project highlights from ADN460 Creative Tech Studio 2, which I taught in the Fall 2024 semester. My students created Metahuman digital double animations with photogrammetry in Reality Capture and Metahuman Creator. They animated their Metahumans with performance capture using Rokoko and Noitom suits and a head rig with Metahuman Animator. Animations were rendered in Unreal Engine 5.4. XR group projects were done with Metahumans in UE5.4 using Tilt 5 AR and Meta Quest VR…

Winter 2025: Presenting “Hyper-Reality Research in a Virtual Production Volume” at SIGGRAPH SOIREE 2025

I will be presenting my SIGGRAPH SOIREE 2025 Fast-forward Video “Hyper-Reality Research in a Virtual Production Volume” on January 31st, 2025. The demo shows Actor-Metahuman interaction research we did in the NC State College of Design Virtual Production Lab in Fall 2024 for the Macbeth Metahuman project:

Fall 2024: Presenting “Macbeth Metahuman: Performing Craig’s Uber-Marionette in Hyper-Reality” at JIT2024 in Avignon

I will be presenting my Metahuman theatre projects in the proceedings of Journées d’Informatique Théâtrale (JIT Computer Theatre Conference), 3rd Edition, in Avignon France from October 9th-11th 2024. Click here to read the extended abstract...

Summer 2024: SIGGRAPH’24 Paper “Level-Up Logics”

I presented the paper “Level-Up Logics: Leveraging Three Game Design Platforms to Teach Coding” in the Educator’s Forum proceedings during SIGGRAPH’24 in Denver last July.

Abstract: Coding has become an integral tool for designers, but the logical structures of programming can be intimidating for many students in the arts. To make learning the fundamentals of coding less stressful and more appealing, I revamped a Creative Coding elective in our Media Arts, Design and Technology (MADTech) program to progress students through three increasingly technical game design platforms. Students were taught how to apply the player-centric MDA (Mechanics, Dynamics, Aesthetics) game design framework to Fortnite Creative, a popular 3D platform that doesn’t require any coding but instead has students configure devices in the game world to implement logical interaction. Students used a library of game assets to build a complex prototype that required collectibles to open a door and progress in the game. These core game mechanics were carried through to similar projects in Unreal Engine and Unity, while the MDA framework enabled students to creatively vary aesthetics and dynamics to make each game look and feel unique. By applying the same logical structures in three platforms, progressing from graphical node-based Blueprints to C# scripting, students gained a better grasp of fundamental coding concepts and showed a preference for scripting by the end of the semester. Though this curriculum was primarily targeted at students in the College of Design, students from more technical backgrounds like Computer Science were still challenged by the more aesthetic features of this design-centric approach to game development.
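The collectibles-and-door mechanic mentioned in the abstract is a compact example of the kind of logical structure that carries across all three platforms. The following Python sketch is only an illustration of that logic, not code from the course (which used Fortnite Creative devices, Unreal Engine Blueprints, and Unity C#); the names `Door`, `Player`, and `try_open`, and the item identifiers, are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Door:
    """A locked door that opens once enough collectibles are held."""
    required: int
    is_open: bool = False


@dataclass
class Player:
    """Tracks unique collectibles by id, so duplicates are not double counted."""
    collected: set = field(default_factory=set)

    def pick_up(self, item_id: str) -> None:
        self.collected.add(item_id)


def try_open(door: Door, player: Player) -> bool:
    """Open the door if the player holds at least the required collectibles."""
    if len(player.collected) >= door.required:
        door.is_open = True
    return door.is_open


# Usage: the door stays shut until a third unique item is collected.
player = Player()
door = Door(required=3)
player.pick_up("gem_a")
player.pick_up("gem_b")
opened_early = try_open(door, player)
player.pick_up("gem_c")
opened_late = try_open(door, player)
```

The same condition (count the collectibles, compare against a threshold, change the door state) could be expressed as a configured device, a Blueprint branch node, or a C# `if` statement.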

Click here for a PDF of my presentation slides…

Click here to download the published paper…

Summer 2024: SIGGRAPH’24 Paper “Metahuman Theatre”

I presented the short paper “Metahuman Theatre: Teaching Photogrammetry and Mocap as a Performing Arts Process” in the Educator’s Forum proceedings during SIGGRAPH’24 in Denver last July.

Abstract: I have been designing a real-time Metahuman animation pipeline in our Media Arts, Design and Technology (MADTech) program at NCSU that emphasizes motion capture as a performing arts process to create dramatic scenes with digital doubles. Students developed a 3D self-portrait using photogrammetry in Epic Games Reality Capture software and Mesh to Metahuman plugin in Unreal Engine, and then performed their double using our inertial mocap suits and control rigs in Autodesk Maya and UE5 sequencer. Facial performance was captured using real-time software like Epic’s Livelink Face app and Metahuman Animator, completing a virtual production process that emphasized embodied acting and improvisation over scripts and storyboarding. By framing the Metahuman as a performing object like a puppet and adapting design frameworks like Disney’s 12 Principles of Animation to performance capture, students learned a technical animation process in the context of a performing arts approach. This produced aesthetics that are different and arguably more performative than the traditional keyframed approach taught in many animation programs.

Click here for a PDF of my presentation slides…

Click here to download the published paper…

Summer 2024: Metahuman Interpretive Scene Test for Mitchelville’s Ghosted Structures

We have signed a course agreement with Historic Mitchelville Freedom Park (HMFP) on Hilton Head Island, SC to begin developing interpretive scenes for their new “ghosted structures” and buildings funded by Boeing, the Mellon Foundation and the State of South Carolina. I travelled to HMFP to gather volumetric data and interview Gullah elders for an interpretive scene test using Metahumans and Unreal Engine 5.4. Over Maymester, I processed the photogrammetry and performance capture data with two MADTech undergraduates and a MAD graduate TA, and here is the resulting scene with Ahmad Ward’s Metahuman introducing Mitchelville and excerpts from two Gullah elder interviews:

Here is a poster we will be presenting at the 2024 Undergraduate Research Symposium:

Summer 2024: MAD Graduate Poster on Hyper-Reality as the Future of Computer Graphics and Interactive Techniques

Rebecca Pyun, a talented graduate student in our Masters of Art and Design (MAD) program, created this poster on her Korean cultural heritage research projects in my Creative Coding and Graduate Studio 2 Hyper-Reality courses last academic year. This poster was submitted to the SIGGRAPH Spacetime poster competition that had the prompt “The future of computer graphics and interactive techniques…” (You can see her game projects on our Creative Coding reel and her hyper-reality project on our Metahuman highlight reel):

Summer 2024: MADTech Metahuman Highlight Reel 2023-24

Highlight reel of all the exceptional Metahuman student work done in my NCSU MADTech courses over the 2023-24 academic year. These real-time animated shorts were produced by applying arts principles to a technical pipeline that included photogrammetry and performance capture:

Spring 2024: Student Metahuman Monologues

I began teaching NCSU MADTech students the Metahuman Animator pipeline in my Special Topics: Motion Capture and Advanced Animation course (ADN492/592), which involved face capture with an iPhone and then developing the performance in Unreal Engine 5.2 for custom Metahuman characters using keyframing on a control rig. Fourteen students were taught how to apply Disney’s animation principles and performing arts techniques to motion capture in our virtual production studio, and then how to develop their real-time scenes in UE5’s sequencer for cinematic rendering. The resulting Metahuman Monologues have a liveness and personality that comes directly from the student’s embodied performance, with improvised expressions that would not be likely in a traditional keyframing process:

Fall 2023: Student Metahuman Cinematics for Creative Tech Studio 2

MADTech students in my Creative Technology Studio 2 course (ADN460) created Metahuman cinematics using photogrammetry and performance capture. The hardware and software they used included Unreal Engine 5.2, Reality Capture, Autodesk Maya, Quixel Megascans, Blockade Labs AI Skybox, Noitom Perception Neuron Studio and Livelink Face. Here are a couple of the best by Laura Reeve and Maleah Seaman:

Fall 2023: Creative Coding Student Game Projects

NCSU MADTech student game design reel for my Creative Coding course (ADN311/502). Students were introduced to three coding platforms: Fortnite Creative, Unreal Engine Blueprints, and Unity C# scripting. They applied game design concepts like MDA, Flow and Narrative Immersion to create similar 3D games in all three platforms, and then designed a research poster to summarize their design process and choices.

2023 NCSU MADTech Open House: Live Mocap Performance with our LED Wall

I performed my Metahuman double for a live audience in a Halloween-themed theatrical performance and mocap demo at our NCSU MADTech Open House in October. This recording shows me in our Noitom motion capture suit performing in front of our LED wall with an Unreal game engine scene that triggers dancing NPC zombies and visual effects like virtual explosions and fire.

2022-23: NCSU MADTech Metahuman UE5 Demo & Projects

Metahuman-Topher was born dancing on a virtual beach! Some stills from a demo project for my NCSU ADN-460 course on using game engine technologies to create a hyper-real self portrait for Unreal Engine 5 cinematics.

I taught students how to use Reality Capture to scan their heads with photogrammetry, so that they could make Metahumans of themselves in UE5. They then exported the model to Autodesk Maya for animation using mocap from our Noitom suit, and created custom clothes in Marvelous Designer. The final animation was rendered in a themed UE5 environment that they built using Quixel Megascans.

Here is a video of my class demo and some of the final student projects for fall and spring:

……

Fall 2022: NCSU Media Arts, Design and Technology (MADTech) AR Projects in Unity

Undergraduate students in my ADN 460 Interactive Media studio course created augmented reality projects using Vuforia AR in Unity game engine, with animation assets created in Steam Fuse, Mixamo, and Autodesk Maya:

……

Fall 2022: NCSU VR Ethics Book Chapter Talk

On November 17th, 2022, I gave a lecture on “The Ethics of Playing Black Bodies in VR” based on my recent book chapter in The Changing Face of VR (Vernon Press, 2022). This was part of the NCSU Art & Design Fall Lecture Series titled Emergence. Here are my slides and a Zoom recording of the talk:

……

Summer 2022: New Book Chapter “VR Storytelling for Social Justice and the Ethics of Playing Black Bodies”

July 22nd, 2022: I have a chapter in the new peer-reviewed book The changing face of VR: Pushing the boundaries of experience across multiple industries, edited by Dr Jordan Frith and Dr Michael Saker (Vernon Press, 2022):

Abstract: Using Augusto Boal’s Theatre of the Oppressed role play principles of a “spect-actor” and joker figure as a performative lens, I analyse VR storytelling experiences by artists of colour that confront social justice issues and educate on Civil Rights history using a variety of platforms from 360 video to room-scale VR installations. I argue that narrative framing to cast a spect-actor into the body illusion of a protagonist of colour can be done ethically, and role play with NPCs designed as joker figures representing the oppressed community can promote empathic understanding.

Keywords: Ethical VR, Experiential Storytelling, Serious Play, Empathy Machine

Read an abbreviated sample of the chapter…

Errata (Chpt 2, Pg 28, Para 1, Sent 3): “Performing an avatar role focuses player attention on the character’s experience and can lead to investing in their relationships with others in the scene, which helps to prevent ego-oriented distractions and both immersion and flow for character actors (Maraffi, 2021).” Should be: “Performing an avatar role focuses player attention on the character’s experience and can lead to investing in their relationships with others in the scene, which helps to prevent ego-oriented distractions, and encourages both immersion and flow for character actors (Maraffi, 2021).”

Summer 2022: USCB-NEH Reconstruction-era Institute Presentation “Mitchelville XR Tour”

July 14th 2022, I presented a talk and slides named Using AI and 3D Tools to Visualize History, which featured design work on our NEH-funded Mitchelville XR Tour research project.

Summer 2022: DIGRA 2022 Conference Paper “Multimodal Framework for Enhancing RPG Playfulness through Avatar Acting Affordances”

July 9th 2022, I presented a peer-reviewed extended abstract of my work-in-progress SGRplay framework for analyzing RPGs and XR storytelling experiences at the 2022 Digital Games Research Association conference. Here is a PDF of the slides for my talk.

Summer 2021: Cinergie Journal Article “Stage Magic as a Performative Design Principle for VR Storytelling”

August 4th 2021, I published an article in a special VR Storytelling issue of the international journal Cinergie:

Abstract: This article examines The VOID’s Star Wars: Secrets of the Empire (2017) VR arcade attraction, and analyzes the intermedial magic principles employed by co-founder and magician Curtis Hickman to create the illusion of a fictive world with impossible space and liveness. I argue that The VOID (Vision of Infinite Dimensions) functioned like nineteenth century magic theaters run by Georges Méliès and others, by employing magic principles of misdirection that directed player attention towards the aesthetics of an illusion, and away from the mechanics of the effects generating technology. Narrative framing and performative role play transported multiple players into a believable Star Wars immersive experience, creating an aesthetics of the impossible that reflected the goal of many stage magic tricks, and was foundational to trick films in the cinema of attractions of the early twentieth century. Using game studies concepts like Huizinga’s magic circle and theatre arts concepts like Craig’s über-marionette, this article suggests that The VOID and other stage magic approaches to VR, like Derren Brown’s Ghost Train (2017), are a new medium for participatory theatre that incorporate immersive features from both cinema and games.

Keywords: VR Magic Circle; Impossible Aesthetics; Immersion; Space in VR; Liveness.

Citation: Maraffi, C. (2021). Stage Magic as a Performative Design Principle for VR Storytelling. Cinergie – Il Cinema E Le Altre Arti, 10(19), 93–104. https://doi.org/10.6092/issn.2280-9481/12234

Download the full article from Cinergie…

Fall 2020: Artificial Creativity 2020 Conference Presentation

Nov 19th 2020: Before popular generative AI art tools like Midjourney, there was a GAN-based online tool called Art Breeder that was used to “breed” images with “gene” features. This 2020 Artificial Creativity Conference (hosted by Malmö University, Sweden) presentation, titled “Sherlock Frankenstein: Transmedia Character Design with AI Breeding Tools”, discusses previous work using Art Breeder to generate preproduction character designs that incorporate human actor style features, and touches on some of the ethical implications:

Fall 2020: Mitchelville and MODS projects Featured in FAU Research Magazine

Our National Endowment for the Humanities and Epic Games Megagrant funded research project Mitchelville AR Tour, as well as our Prehistoric Florida project for the Museum of Discovery and Science, were featured in FAU’s Owl Research & Innovation Fall 2020 issue, on pages 19-21:

Spring 2020: Ethical XR Symposium

On Feb 21st we hosted an Ethical XR Symposium with SCMS Multimedia Studies faculty at Living Room Theaters on the FAU Boca Raton campus. Magic Leap participated in the demos with MTEn MFA students, and talks were given by media scholars from around the country, including Harvard, NYU, and Columbia (photos by Topher Maraffi and Nicole Morse):

Spring 2020: Research Encounter Event at FAU Boca Campus

FAU Media, Technology, and Entertainment students in my XR Immersive Design course showed their research project prototypes at Research Encounter 2020 on Feb 12th. Hosted by the FAU Division of Research, we conducted demos of several projects on the Magic Leap and Oculus Quest headsets. FAU President John Kelly stopped by to see what we have been working on, and there were many attendees from the university and Boca community (photos by Brandon Martinez and Topher Maraffi):

Summer 2019: FAU-MODS Apptitude AR Course

FAU SCMS and Computer Engineering faculty taught a STEAM (STEM+Art) summer school course for high school students that focused on designing and developing augmented reality (AR) apps for research posters. This course is part of the Fort Lauderdale Museum of Discovery and Science (MODS) Apptitude internship program, and runs for three weeks in the summer at the FAU Boca campus. Students learned how to create an AR app in the Unity game engine that displayed green-screen video and animated 3D models on their research posters for museum exhibit topics.

Students working in class and the Freshwater habitat group poster:

A video of MODS project demos (by grad TA Richie Christian) and final Apptitude AR summer school poster presentations:

Spring 2019: FAU MTEn Grad Research Day Posters

Media, Technology, and Entertainment (MTEn) MFA students in my Interactive Interface Design (DIG6605) course presented research posters at the 10th annual FAU Graduate and Professional Student Association (GPSA) Research Day. Pictured clockwise are: Raven, Monisha, and John Horvath; Alex Henry; Monisha Selvaraj; Camilo Morales; Brandon Martinez and Alberto Alvarez; Alex; Raven Doyle.

Spring 2019: FAU MTEn-XR Lab Open House

We had an open house in our Media, Technology, and Entertainment (MTEn) Extended Reality (XR) lab for potential MFA students. We demo’d our Magic Leap, Oculus Rift, HTC Vive, and Noitom Perception Neuron mocap technology:

Spring 2018: USCB Film & Digital Media Symposium

We held the second USCB Film and Digital Media Symposium in partnership with the Beaufort International Film Festival, with a Sea Island Center gallery reception, lightning round talks from USCB faculty and BIFF filmmakers, and a keynote talk by The Simpsons director Mark Kirkland.

“Hopscotch Joiner” (55”x24”), Digital Imaging course compositing demo of applying David Hockney’s joiner compositing technique using digital media to create a seamless panorama scene, Spring 2018.

“Self Portrait” (32”x24”), Digital composite print on canvas, and then layered with acrylic paint, Spring 2018.

Fall 2017: USCB Faculty Exhibition

In November, see the latest artworks by USCB Fine Arts faculty at Sea Island Center gallery on the historic Beaufort campus. Here are some pictures of our opening reception on Thursday November 2nd. The show featured two of my mixed-media canvases and digital imaging studies, as well as a poster and teaser video on my documentary project.

Artist Statement

This year I am showing three creative works and related practice-based media arts research that began as class demonstrations in my 2017 USCB courses.

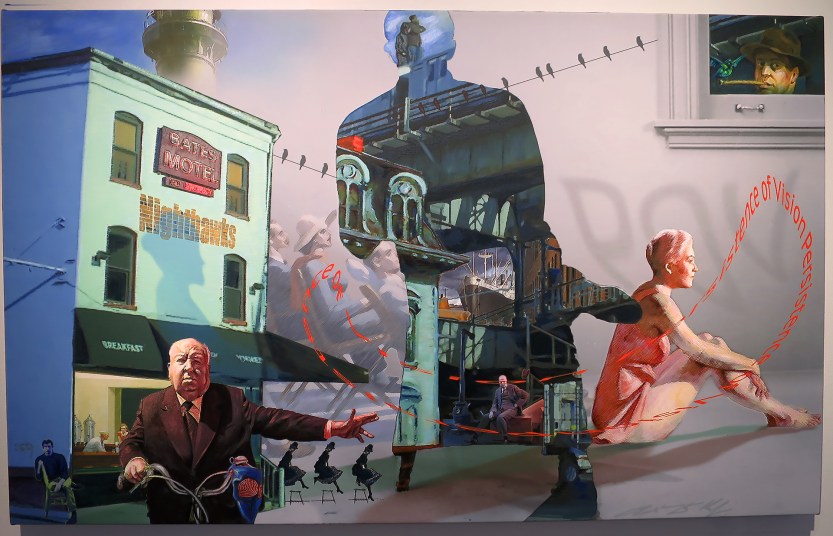

“Persistence of Vision: Coasting Ahead, Hitch Throws Hopper the POV Sign” (42”x26”), Media Design digital compositing demo with samples of Alfred Hitchcock and Edward Hopper imagery, printed on canvas and layered with acrylic paint glazes, Fall 2017.

The piece displayed in the main gallery entitled Persistence of Vision: Coasting Ahead, Hitch Throws Hopper the POV Sign was started as a compositing demo in Adobe Photoshop for my Fall 2017 MART B102 Media Design course. The POV theme refers to the nineteenth-century concept of the same name that gave birth to cinema. The term described the visual phenomenon that a sequence of images played at a sufficiently fast rate creates the illusion of continuous motion. More conceptually, POV is being used to reference the evolution of a visual aesthetic that was first introduced by Renaissance painters experimenting with optical devices, evolving to the techniques used in chemical photography and cinematography, and very much employed by digital artists today. The compositing process I demonstrate in my course uses the same techniques Renaissance painters first developed in the fifteenth century: staging a scenic narrative from a variety of image sources, modeling the curvature of forms with high-contrast lighting, and using perspective lines to create the illusion of depth.

In addition to exploring concepts related to the POV theme, students were required to research at least one cinema artist and one fine arts painter who relate to each other, such as Alfred Hitchcock and Edward Hopper, and then digitally compose an image that incorporated visual and style references from both. My demo piece referenced how Hopper was inspired by early cinema, often going to the movies for weeks at a time, and how Hitchcock staged scenes that echoed the aesthetics of a Hopper painting. Source elements were significantly processed through digital filters to create a unique aesthetic, and the Hunting Island lighthouse was incorporated to relate the scene to Beaufort. Then I printed the digital composite on canvas so that I could hand paint acrylic glazes onto the surface, adding a final layer of texture and color depth to the piece.

“Lab Monitor” (36”x28”), digital “joiner” collage printed on canvas and enhanced with ink, colored pencil, and acrylic paint, Summer 2017.

The mixed-media piece in the hallway entitled Lab Monitor, with related studies and research poster, was also started as a class demo inside of Adobe Photoshop. However, the work went through a more complex process of alternating between digital and physical media in my MART B145 Digital Imaging course. We initially used digital photography to crowd-source images of a scene in our lab where I did a simple action of rotating in my chair. Students used the photos to explore David Hockney’s “joiner” collage technique, where photos are treated like brush strokes to create a Cubist representation of motion in time and space, but inside of Photoshop. This allowed us to process source photography through paint filters to make them more compatible with physical media, and then to print the final result on matte paper to enhance with ink, colored pencils, and paint. The next stage of the project involved using the prints to create texture maps in Blender 3D software and model a digital representation of the original scene. We then imported the scene into the Unity game engine to make a “joiner” game where we could interactively create Hockney-style collages through a photography play metaphor.

“Study for Lab Monitor” (16”x20”), digital animated “joiner” in Blender 3D software.

I composited several of these game-generated collages to create the final image that was printed on canvas, and then layered with physical media to add the hand of the artist back into the work.

“Jumpin’: SC Roots of Swing Dance Documentary Teaser” (30” Mac), Digital video loop, Summer-Fall 2017.

The Jumpin’: SC Roots of Swing Dance video teaser and research poster show work being done related to my MART B250 Broadcast Design course. This project involves using the latest digital filmmaking techniques, including visual effects and motion graphics, to create a PBS-style feature documentary on how swing dancing in the early twentieth century originated from the Gullah-Geechee culture in Beaufort County. The pre-production research displayed in this work was conducted over summer and fall 2017 with a Sea Island Institute grant, in partnership with SCETV, including storyline and visual development for motion graphics, backgrounds for green-screen interviews, 2.5D visual effects, and lower-third graphic designs.

“Research Poster for Jumpin’ Documentary” (48”x36”), Digital print on paper, Summer-Fall 2017.
