Media, Technology, and Entertainment MFA (MTEn-MFA) Lab Courses & XR Research Projects at Florida Atlantic University

Multimedia courses I teach at FAU focus on game design, virtual production, and emerging technologies such as the latest extended reality (XR) headsets. MTEn-MFA courses include Preproduction, Prototyping and Previsualization (DIG6546), 3D Production for Interactivity (DIG6547), and XR Immersive Design (DIG6605). My grad courses are often cross-listed with my undergrad Film, Video, and New Media BA courses, such as Visual Design for Film, Animation, and Games (DIG4122C), Advanced 3D Computer Animation (DIG3306C), and 3D Video Game Design (DIG3725C). I also teach a Video Game Studies (DIG4713) course in the Spring semester. Note! Our MFA and BA programs are moving to the downtown Fort Lauderdale campus over the 2020-2021 academic year, but all of my courses will be held online in Fall 2020 because of COVID-19.

Projects, Courses, and Events

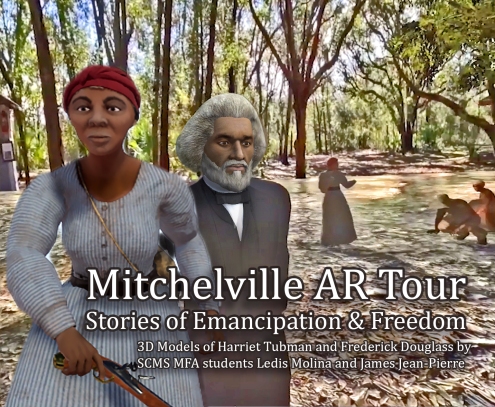

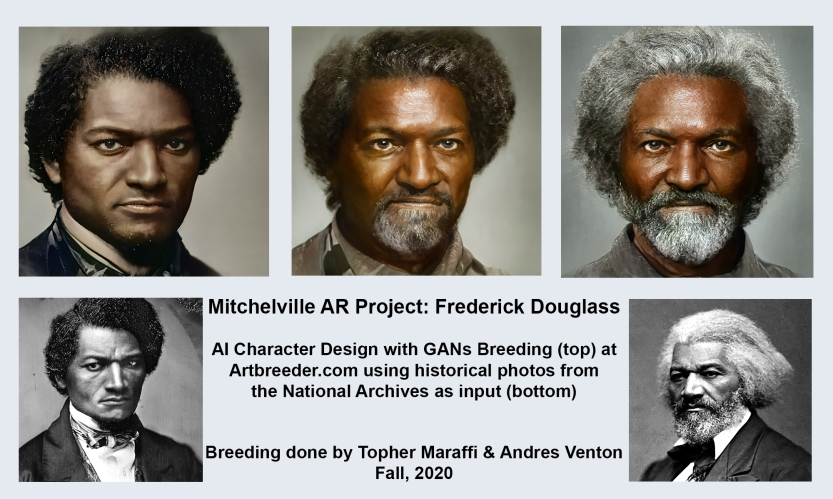

Mitchelville AR Tour Funded Research Project

2019-2020 This project was awarded a National Endowment for the Humanities (NEH) Digital Projects for the Public “Discovery” grant, an Epic Games Megagrant, and a Walter & Lalita Janke Emerging Technologies Fund seed grant to complete preproduction design work on an augmented reality (AR) tour of Historic Mitchelville Freedom Park on Hilton Head Island, SC. Mitchelville was the first Freedmen’s town in the US during the Civil War and is a Gullah-Geechee heritage site today. We will be creating a 360-degree experience of the story of America’s first efforts at civil rights for African Americans during the Reconstruction Era, as it relates to the Port Royal Experiment, with life-sized historical figures like Harriet Tubman that visitors will experience on site through Magic Leap headsets and mobile phones.

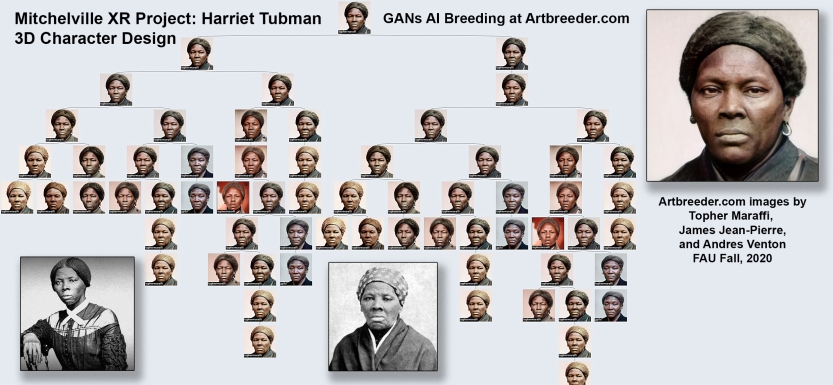

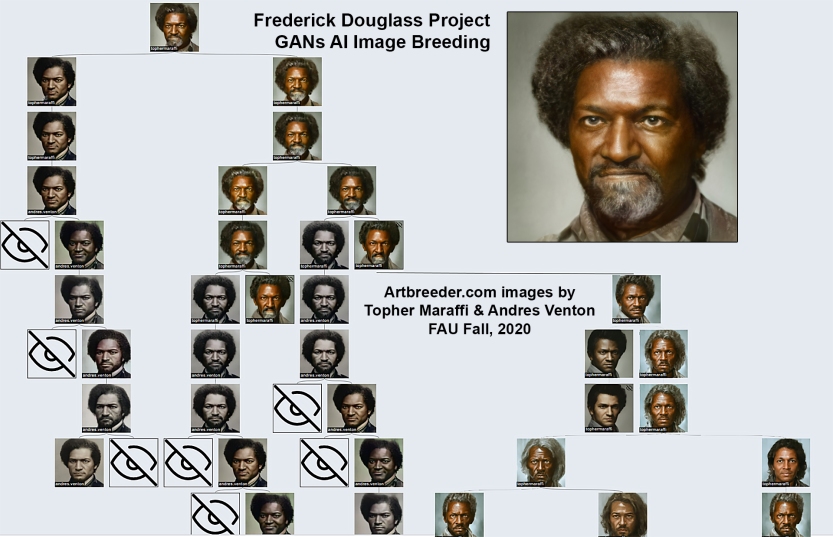

New! Fall 2020 we began using Generative Adversarial Networks (GANs) and neuro-evolutionary AI breeding tools to design more realistic 3D versions of historical characters like Harriet Tubman and Frederick Douglass. This work is part of a new AI-3D-XR pipeline that we are developing for the Mitchelville project prototyping phase:


________________________________________________________________

Museum Research & Pedagogy to Enhance Exhibits With XR

We have submitted an Institute of Museum and Library Services (IMLS) grant proposal to expand our summer App-titude collaboration with the Fort Lauderdale Museum of Discovery and Science (MODS) into year-round research and pedagogy on using XR to enhance museum exhibits. Over three weeks in the summer, we taught App-titude high-school interns how to create AR-enhanced research posters on STEM topics featured in MODS exhibits.

2019-2020 Graduate student Richie Christian started preproduction on a Prehistoric Florida AR project in my 3D Production for Interactivity (DIG6547) MFA course, and then created a VR simulation of the AR app using 360-degree imagery of MODS and lip-syncing on a virtual 3D docent. Here is a video of the preproduction designs and VR test:

Here are some more realistic virtual docent designs created with our AI-to-3D character development pipeline:

________________________________________________________________

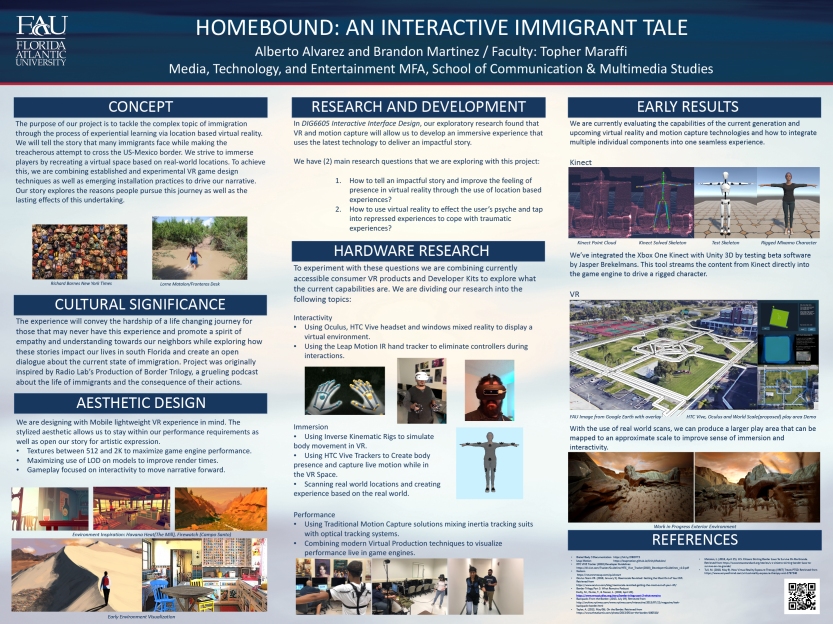

Homebound VR MFA Thesis Project

Spring 2020 Media, Technology, and Entertainment graduate students Alberto Alvarez and Brandon Martinez successfully defended their MFA thesis Homebound: An Immersive Immigrant Tale, which featured an interactive narrative where the player determines the fate of an undocumented immigrant in US Border Patrol custody:

Spring 2019 Brandon Martinez and Alberto Alvarez created the initial designs and prototype for their VR thesis project in my Interactive Interface Design (DIG6605) course. They showed their research poster at the FAU Graduate and Professional Student Association (GPSA) Research Day:

Some image samples from their design document:

________________________________________________________________

Dhayam VR MFA Thesis Project

Spring 2020 Media, Technology, and Entertainment graduate student Monisha Ramachendran Selvaraj successfully defended her MFA thesis Dhayam VR, a 3D room-scale VR experience of the Indian board game played in the epic Mahabharata, with an integrated narrative of the classic tale:

Fall 2019 Monisha Selvaraj created preproduction designs and developed her 3D assets and Unity game engine prototype for the VR game:

________________________________________________________________

MRder Mystery Theater Game Magic Leap Research Project

Spring 2020 MTEn MFA students and undergraduate students in my cross-listed XR Immersive Design course worked on developing a MRder Mystery Theater app for the Magic Leap headset. This project applies spatial computing to a classic murder mystery improv theater narrative, with competitive and performative components. MFA student Jeffrey Kesselman developed a test that places a dead body, bloodstains, and a knife in the real environment as seen through a Magic Leap headset:

________________________________________________________________

Video Game Studies Research

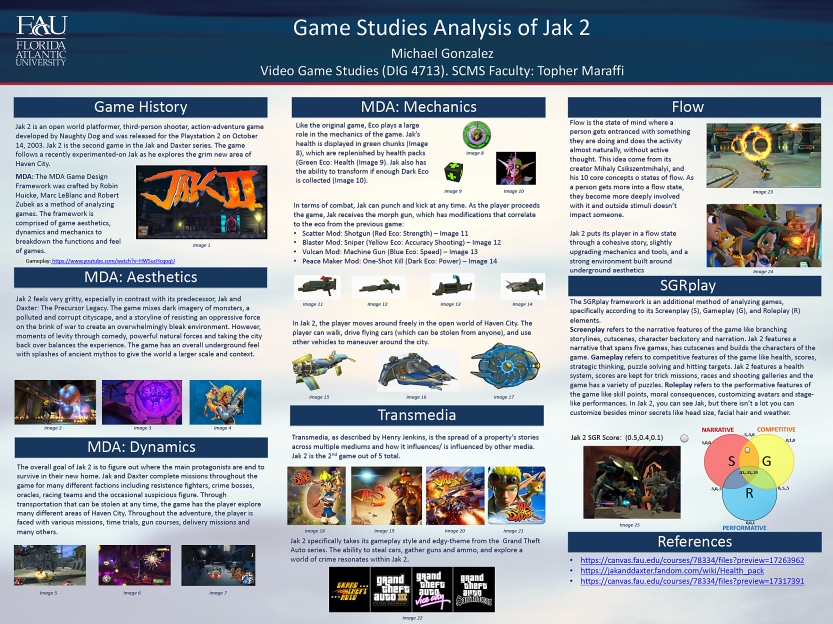

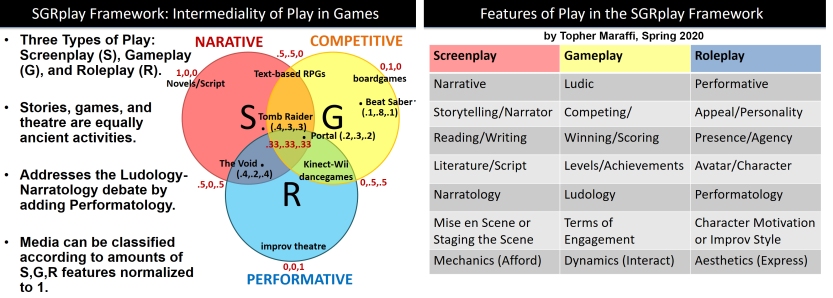

Spring 2020 I developed a new analytical framework for studying the intermediality of play in games, called the SGRplay (pronounced sugar+play) Framework, and taught it for game analysis in my undergrad Video Game Studies course and for game design in my graduate XR Immersive Design course. SGR stands for three fundamental types of play (Screenplay, Gameplay, and Roleplay), which correspond respectively to the narrative, competitive, and performative design components of an interactive media experience.

My game studies students combined this framework with other popular design frameworks, such as MDA (Mechanics, Dynamics, and Aesthetics) and the concept of Flow, to formally analyze games they played. Here is a sample of game analysis research posters from the course:

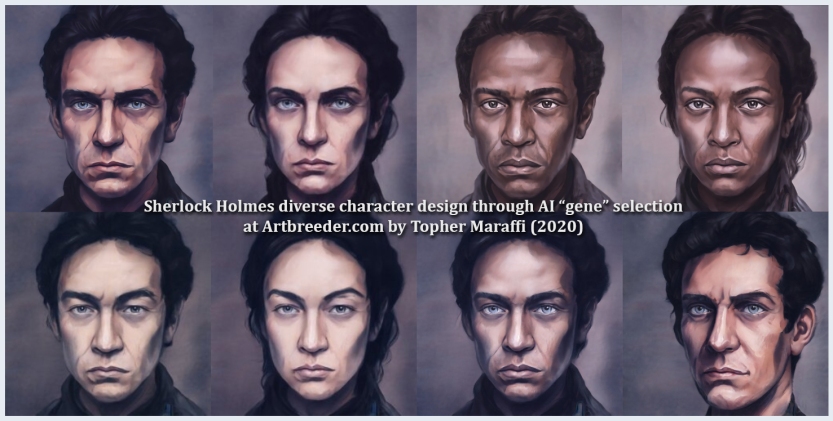

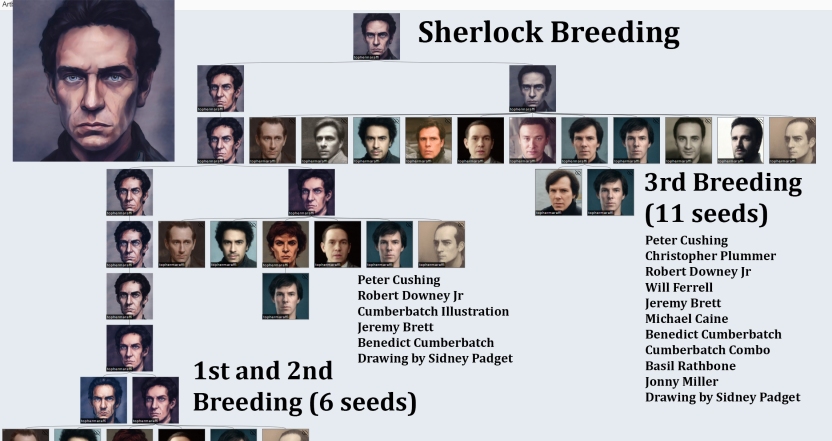

Fall 2020 I began researching how to use AI tools to design more diverse game characters, drawing on transmedia representations of iconic public-domain literary characters such as Sherlock Holmes and Frankenstein. With source imagery from popular media as inputs, I used GANs and neuro-evolutionary AI breeding tools to develop diverse representations that combine features from the canon of media portrayals:

________________________________________________________________

Funded Driving Game Research Project

Spring 2019 We received a Dorothy F. Schmidt College of Arts and Letters seed grant to begin an interdisciplinary project, in collaboration with Computer Engineering faculty Dani Raviv, Hari Kalva, and Aleks Stevanovic, to develop a driving game simulation using the Magic Leap One headset that will be used to inform autonomous vehicle design. Our game simulation will track how highly ranked drivers use their eyes and bodies to maneuver a vehicle successfully in an urban environment. MTEn MFA students Alberto Alvarez and Brandon Martinez will be developing this project throughout the 2019-2020 academic year. Here is a video of a smaller VR car project they created for my spring 2019 Interactive Interface Design course:

________________________________________________________________

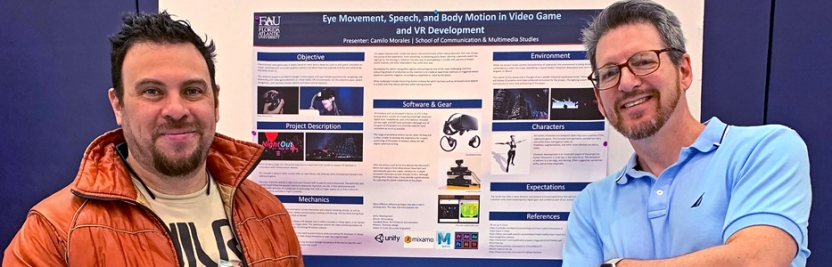

FAU 2019 Graduate Research Day MFA Posters

MFA students in my Interactive Interface Design (DIG6605) course presented research posters at the 10th annual FAU Graduate and Professional Student Association (GPSA) Research Day. Pictured are Camilo Morales (above) and, clockwise, Brandon Martinez and Alberto Alvarez; Raven Doyle; Monisha Selvaraj; Alex Henry; and Raven, Monisha, and John Horvath:

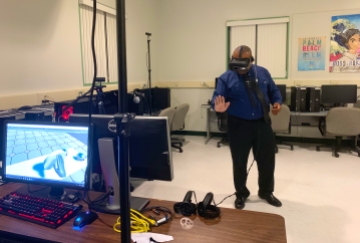

2019 MTEn-MFA Lab Open House

Spring 2019 We held an open house in our MTEn-MFA lab on the Davie campus for potential students, where we demonstrated our Magic Leap, Oculus Rift, HTC Vive, and Noitom Perception Neuron mocap technology:

________________________________________________________________

Advanced Animation and Performance Capture

Spring 2019 and 2020 My Advanced 3D Computer Animation (DIG3306C) students created animation sequences from motion capture data, using both clips from online libraries and custom movements we captured with the Noitom Perception Neuron suit. Here is a selection of their work from Spring 2020, followed by Spring 2019:

________________________________________________________________

3D Video Game Design Projects

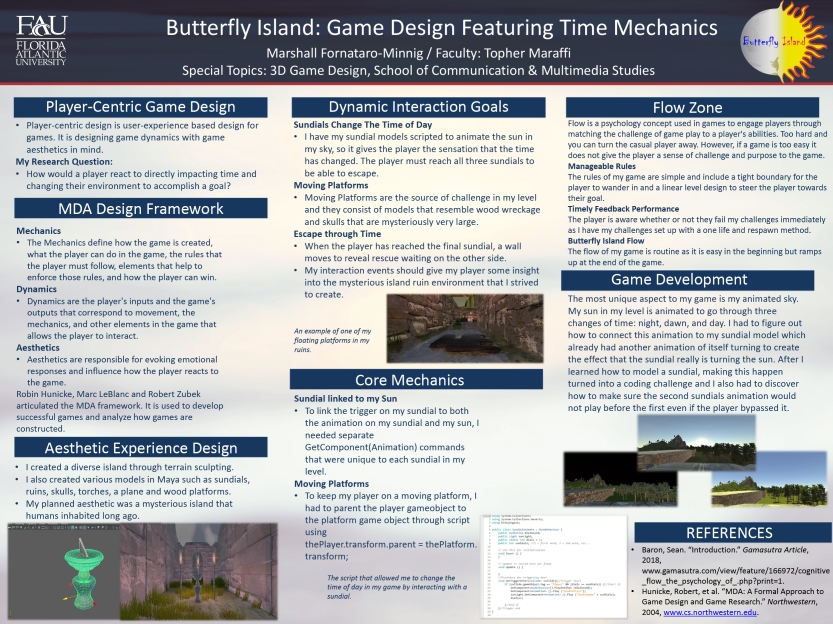

Fall 2020 Students in my cross-listed 3D Video Game Design (DIG3725C/6547) courses used the Unity game engine, Autodesk Maya, Adobe Mixamo, and C# scripting to develop 3D video games using the MDA (Mechanics, Dynamics, Aesthetics) game design framework, as detailed in their research posters. Here is a selection of student game demos and posters:

Students in my previous 3D Video Game Design course used the Unity game engine and Autodesk Maya to create seek-and-find 3D island adventure games:

Students also created research posters, and Marshall Fornataro-Minnig showed a demo of his game at FAU’s Undergraduate Research Day:

________________________________________________________________

2018 Visual Design & Preproduction Projects

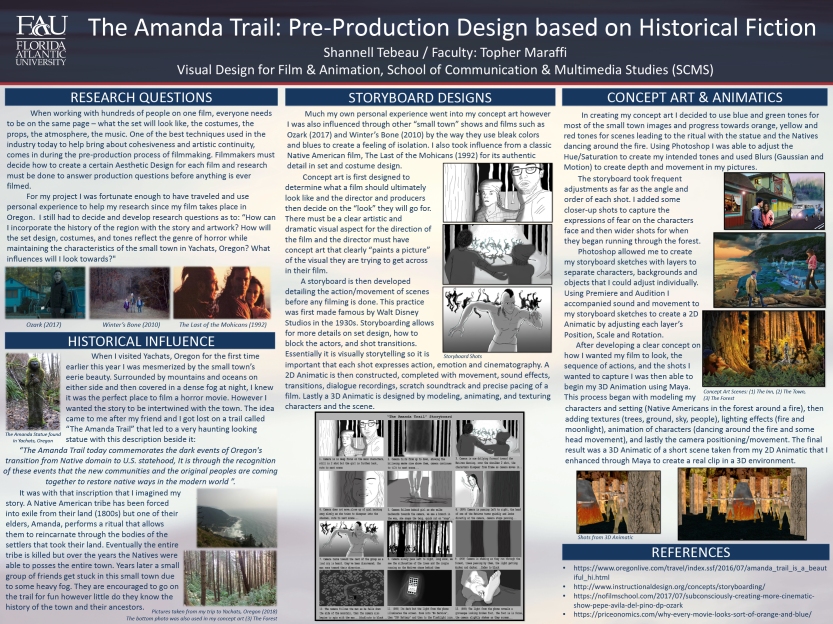

Fall 2018 My Visual Design for Film, Animation, and Games undergrad course is cross-listed with my Preproduction, Prototyping and Previsualization grad course in the fall semester. In this course, students go through the entire preproduction pipeline, including planning a media concept, designing aesthetics, painting concept art, drawing storyboards, and creating animatics. Here are samples of student concept art:

DIG4703 student Shannell Tebeau presented a research poster of her history-inspired preproduction work at FAU’s Undergraduate Research Day:

Students use a tablet and stylus to digitally visualize their narrative by drawing a storyboard of their scenes:
