NCSU College of Design faculty in Media Arts, Design and Technology (MADTech) are collaborating with Historic Mitchelville Freedom Park (HMFP) to develop the research project A MAGIC Tour of Mitchelville’s Ghosted Structures: Memories of the Elders and Echoes of the First Gullah Town (formerly Mitchelville XR Tour), funded by NEH Digital Projects for the Public and an Epic Games Megagrant. Mitchelville was the first self-governing town of formerly enslaved Gullah-Geechee people in the eastern United States during the Civil War (founded late 1862), a central feature of the Port Royal Experiment (1862-1865) in African American self-reliance during the war and into the Reconstruction era (1863-1877), and is now a heritage site and park on Hilton Head Island, South Carolina. A diverse team of digital media faculty and humanities experts from the UNC system, Clemson University, and University of South Carolina Beaufort will collaborate with local leaders and preservationists from the greater Gullah-Geechee community in Beaufort County.

We are developing a college-level curriculum for designing humanities exhibits about HMFP using emerging technologies, with prototypes designed to enhance the “ghosted structures” installed on an interpretive trail in the park. We are also developing a summer school curriculum to enhance HMFP’s year-round Modeling our Ancestors to Generate Influence and Change (MAGIC) program in their new classroom-lab (J on HMFP Master Plan), initially funded by the Mellon Foundation and the State of South Carolina. Here are the latest narrative and design documents for the project, which we submitted for the NEH Digital Projects for the Public Prototyping-level grant. The project continues to be developed in the 2024-2025 academic year through a funded academic course design agreement ($25K ACDA) with HMFP, where NC State MADTech students are creating interpretive scenes and prototype tests of Metahuman representations of Gullah-Geechee elders and interactive simulations of the park in our Virtual Production Lab. In spring 2025, we are piloting a 6-credit Global Experience studio where MADTech and Design Studies seniors will learn about Gullah-Geechee culture by working on the project and visiting HMFP over spring break:

Fall 2025: Coming Soon! Mitchelville Fortnite Experience

We are developing a Mitchelville educational experience in Fortnite featuring MetaHuman digital doubles of Gullah-Geechee community leaders, scholars, and elders telling the story of the heritage site using Unreal Editor for Fortnite (UEFN).

Spring Break 2025: ADN460 Mitchelville Research Trip

In March I took ten students and two faculty to Beaufort County to gather spatial data at Historic Mitchelville Freedom Park (HMFP) on Hilton Head Island. The students received Global Experience credits by learning about Gullah-Geechee culture through Gullah Heritage tours on Hilton Head and a Reconstruction Era National Historical Park tour in Beaufort. We captured photogrammetry scans and performance data of the Director of HMFP, Ahmad Ward, award-winning performer Marlena Smalls (left), and Gullah Days author Carolyn Grant (right):

As part of their final deliverables, students designed individual research posters about their experience…

Students first learned how to create and animate MetaHumans of themselves using photogrammetry and performance capture in Unreal Engine 5.4 before working on data recorded at Mitchelville:

Here are some places we visited in Beaufort County over Spring Break…

NCSU 2024 Ghosted Structures Interpretive Scene Test with Hyper-Real Metahumans:

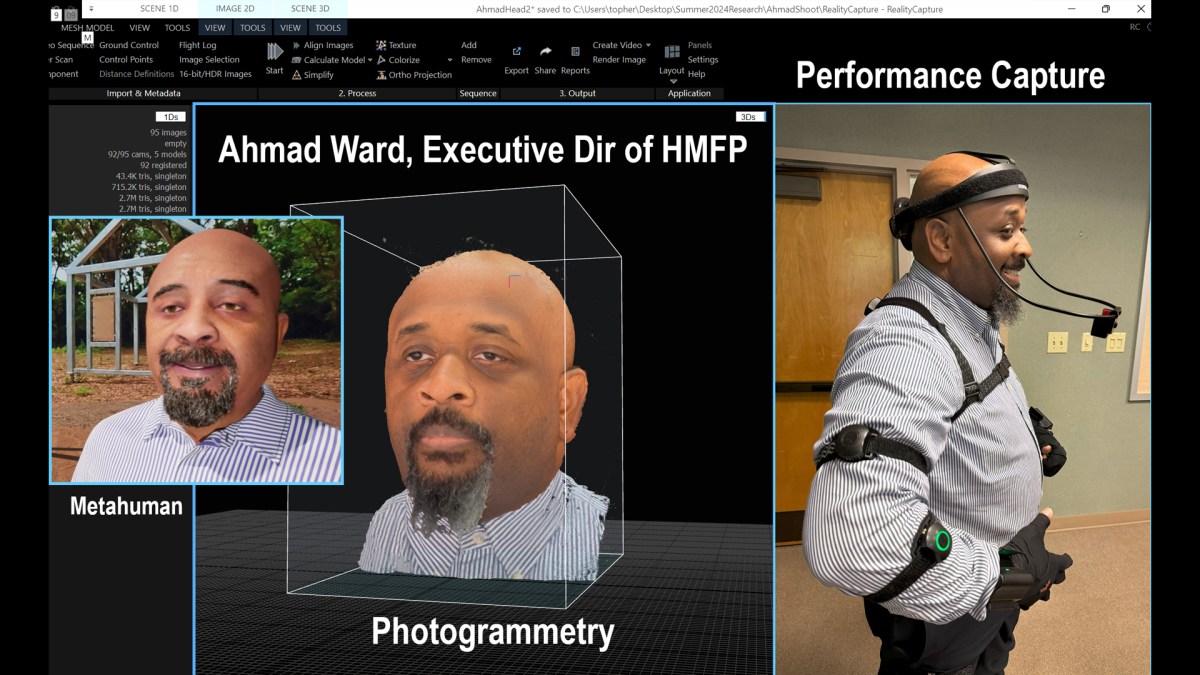

NCSU Summer 2024: We processed photogrammetry and performance capture data of Ahmad Ward, the Executive Director of Mitchelville, and two Gullah-Geechee elders (Mr. Morris Campbell and Mrs. Mary Green) to create a 360 scene of the Ghosted Structures featuring Metahuman doubles. Here is our first scene test, done over Maymester:

I travelled to Hilton Head Island at the end of the spring semester to volumetrically scan and performance capture Ahmad Ward using our Noitom inertial suit and a Rokoko headrig with my iPhone running Epic’s Live Link Face app. We processed the data in Reality Capture and in the Metahuman Creator and Metahuman Animator tools in Unreal Engine 5.4:

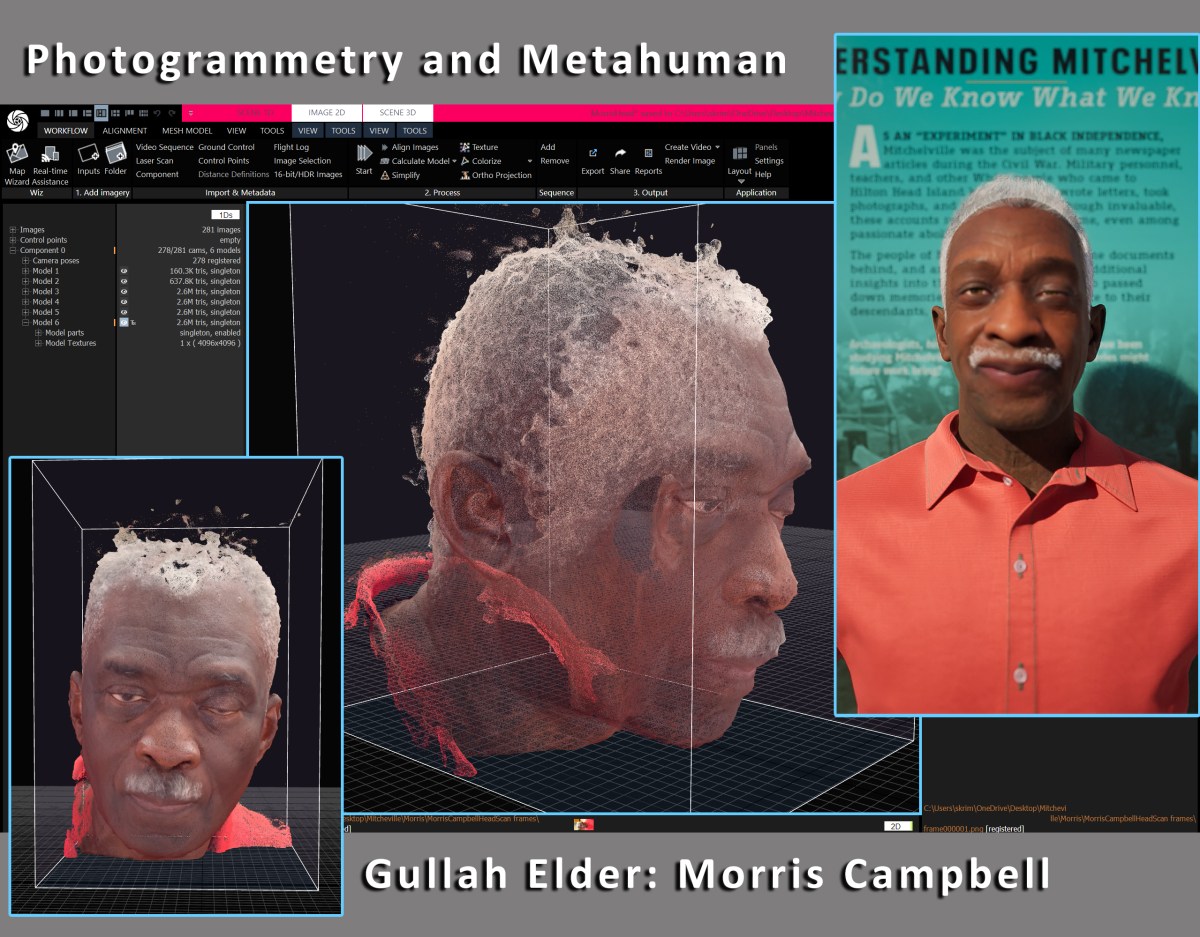

I also scanned and interviewed two Gullah-Geechee elders with a series of questions about their memories of life as an islander and what Mitchelville means to them. MADTech undergraduate students Maleah Seaman (Ahmad Metahuman) and Niyana Haney (Mary Metahuman), along with MAD graduate TA Rebecca Pyun (Morris Metahuman), worked on processing the data over Maymester:

We processed short excerpts of Morris Campbell’s and Mary Green’s interviews in Metahuman Animator. Later we will include the majority of the interviews in the windows and doors of our ghosted structures interpretive scene:

We will also be testing our interpretive scenes on the LED wall in our Virtual Production Studio. Our goal in the prototyping phase is to develop the curriculum and examples for several hyper-real interpretive scenes at HMFP, and then deploy those scenes to a variety of platforms and interfaces. An interactive LED wall interface using AI-mocap and Unreal Engine is just one platform we will explore. Others will be AR experiences in the park and 360 video or VR experiences online:

A big thanks to Mr. Ward, Mr. Campbell, and Mrs. Green for trusting us with representing them in this new form of documentary storytelling! MADTech team in our Virtual Production Studio from left to right: Rebecca Pyun, Maleah Seaman, Niyana Haney, and Topher Maraffi.

Research poster created for the 2024 NCSU Undergraduate Research Symposium:

NCSU 2023 Generative AI Designs of Hyper-real Metahumans for Reconstruction Live! and TITAH XR Exhibits:

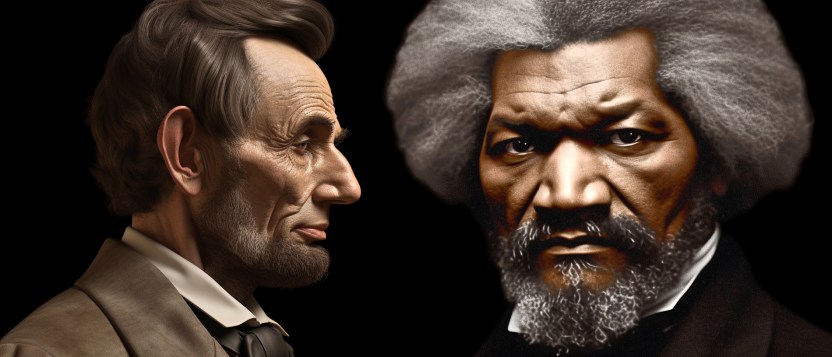

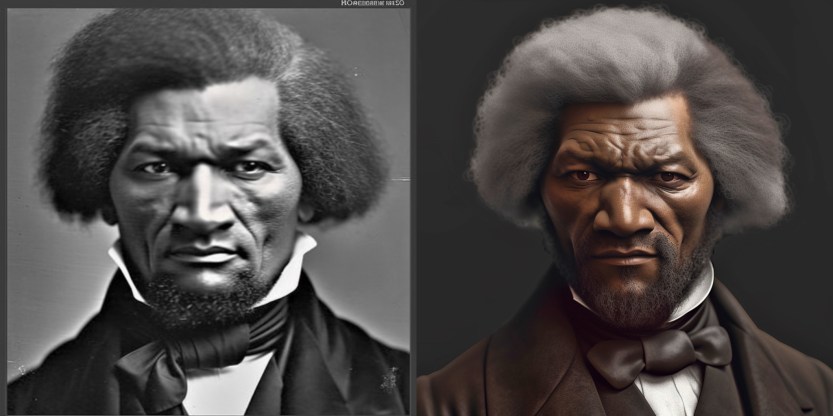

NCSU Summer 2023: I used startup funds to hire MADTech undergraduate student AJ Lawrence as a research assistant to help me develop new hyper-real Metahuman figure designs for Reconstruction Live! Tabletop AR Theatre Play for the Tilt 5 augmented reality platform. The initial prototype will simulate a stage scene depicting Frederick Douglass’ 1863 meeting with Abraham Lincoln to discuss equal pay and treatment for Black Union soldiers, using Douglass’ accounts to develop dialogue.

We are using Midjourney generative AI to visualize full color hyper-real models of Douglass and Lincoln:

Reconstruction Live! is designed to have the aesthetics of a live stage show or puppet theatre, drawing from Walt Disney’s animatronic shows like Great Moments with Mr. Lincoln and historical impersonation shows like Hal Holbrook’s Mark Twain Tonight!, but featuring narratives of Black heroes of the Reconstruction Era like Douglass:

Virtual production and performance capture will be used to animate Metahuman-quality historical figures that have the liveness and presence of real actors, and that break the fourth wall to look at and speak directly to the player about history:

Our goal is to engage viewers through design frameworks from participatory theatre in order to educate on neglected emancipation histories that impact contemporary issues of race and Civil Rights, both at the park and in young adult classrooms.

Actors will be performance captured to simulate believable movements on hyper-real models, and dialogue for iconic figures like Frederick Douglass will be adapted from their writings. We will also use generative AI to improvise new dialogue in Douglass’ style, so that the virtual figures can contextualize the scene for players:

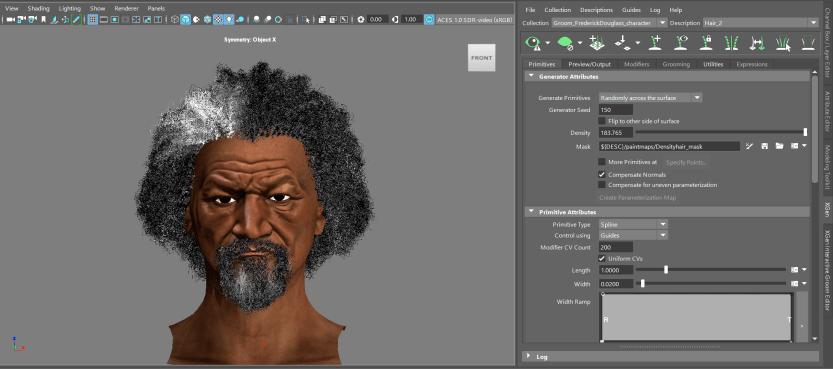

We are using Midjourney generated images as source material for 3D models and textures that can be refined into Metahuman-quality figures for animation in a game engine:

Reallusion software and its AI Headshot plugin generate a 3D model from the Midjourney image:

We are exploring virtual production pipelines for editing Reallusion models in Autodesk Maya and ZBrush, and then sending them to Unreal Engine Metahuman Creator. Hair is developed in Maya XGen and exported as a groom to UE5:

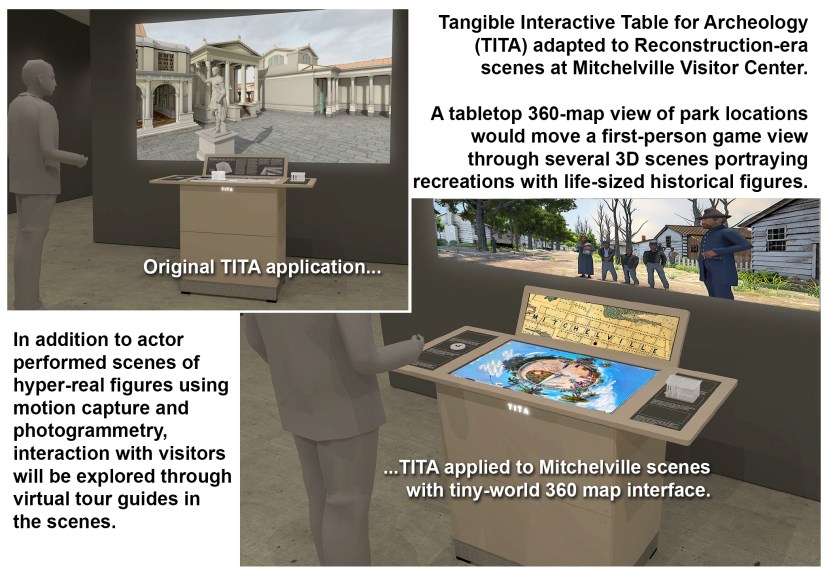

We have proposed adapting NCSU faculty Todd Berreth’s “TITA” platform for presenting our Mitchelville XR Tour scenes in an interior space with an Interactive Table for Archeology and Heritage (TITAH). This exhibit would be housed in a new classroom lab being built in Historic Mitchelville Freedom Park with Mellon Foundation funding:

FAU 2019-2020 Video Demo of Virtual Preproduction and Headset Tests:

FAU Spring 2020: We used historical photographs of Mitchelville inhabitants and houses, taken by Henry P. Moore in 1862, to create a 3D simulation of the town in the Unity game engine:

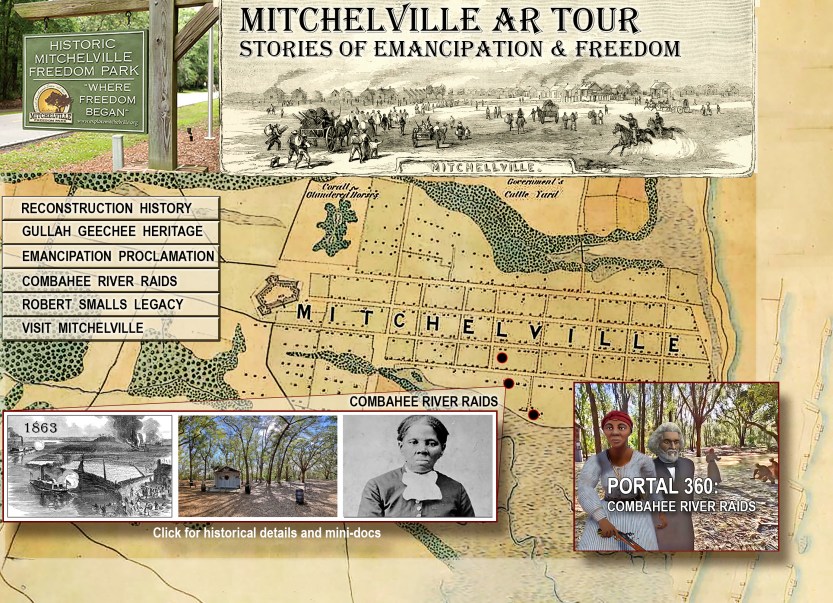

We initially designed a multimodal production pipeline to distribute 3D scenes developed in a game engine (Unity and/or Unreal) to multiple XR platforms, including augmented reality (AR, below left) mobile devices and mixed reality (MR, below middle) headsets in the park, a downloadable app for virtual reality (VR, below right) headsets, and a website portal to a 360 interactive web experience (next image below).

The designs initially included a web site that featured links to 360 renders of the AR prototype scenes, as well as additional educational content and mini-documentaries:

Here is a 360 web browser test of the Harriet Tubman AR scene set at the Prayer House in Mitchelville (left mouse click and drag to look around the 360 rendered scene):

- 360 Demo by FAU MFA student Ledis Molina. Music by Anita Singleton-Prather and the Gullah Kinfolk, recorded live at the 2018 Original Gullah Festival in Beaufort, SC.

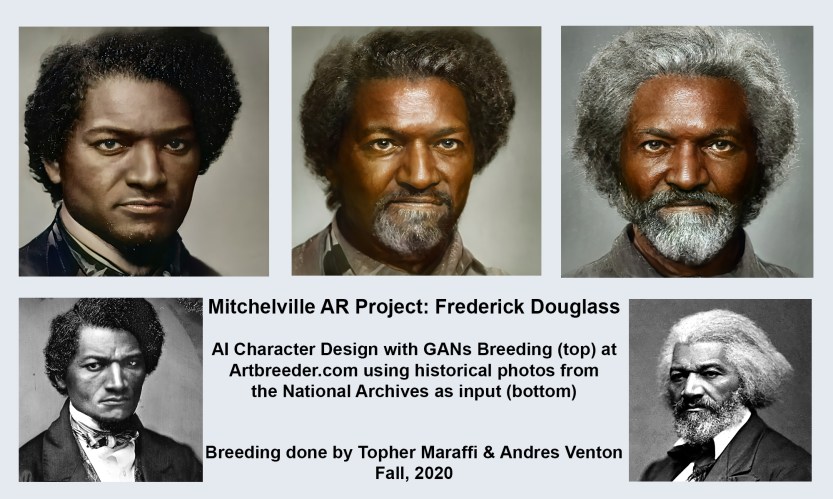

FAU Fall 2020: We started developing an AI 3D character design pipeline that uses Generative Adversarial Networks (GANs) and neuro-evolutionary AI image “breeding” tools (a badly named technical term for image feature tuning) to design realistic versions of historical characters from source photographs in the National Archives. Here are some examples of full-color images of Harriet Tubman and Frederick Douglass developed with the Artbreeder web tool:

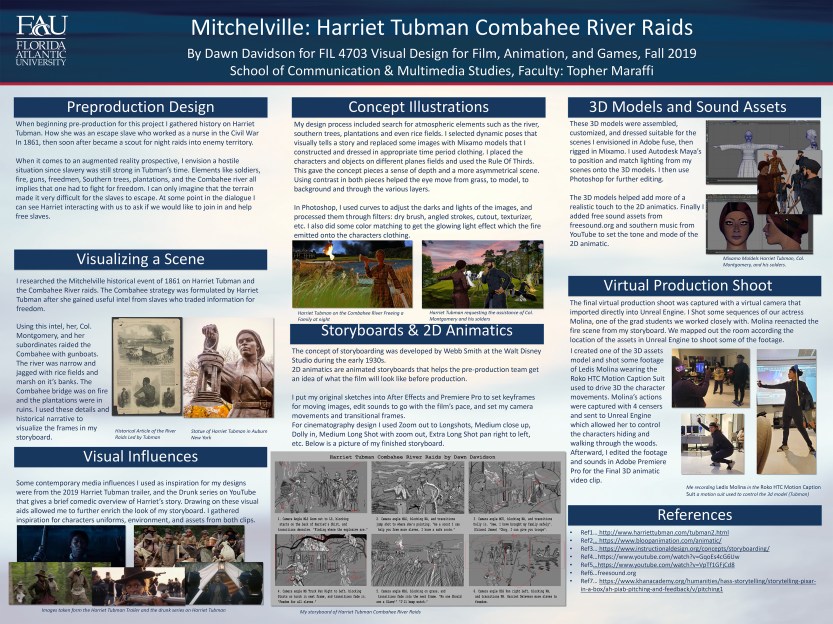

FAU Fall 2019: We started Mitchelville pre-production work in my Preproduction, Prototyping, and Previs (DIG 6546) MTEn grad course, which is cross-listed with my undergrad Visual Design for Film, Animation, and Games (FIL 4703/DIG 4122C) course, with both grads and undergrads working together on visualizing several stories related to Mitchelville and Reconstruction in Beaufort County. Students created 3D models of historic personalities like Harriet Tubman, Robert Smalls, and Frederick Douglass, and contemporary Gullah-Geechee storytellers like Aunt Pearlie Sue as performed by Anita Singleton-Prather:

Harriet Tubman Combahee River Raids research poster by undergraduate Dawn Davidson:

We started virtual pre-production to create 3D cinematics for the Harriet Tubman Combahee River Raids storyboard using our Rokoko motion capture suit, HTC Vive base stations and trackers, and the Unreal game engine. Graduate student Ledis Molina performed Harriet, assisted by graduate students Alberto Alvarez, Brandon Martinez, and Camilo Morales, and undergraduate students Dawn Davidson, Mike Gonzalez, Juan Aragon, Tiffany Fedak, Alexandra Lusaka, Brandon Cela, and Robert Barclay:

Here is a render test in the Unreal game engine of some shots edited by undergraduate student Tiffany Fedak, with a 3D model by graduate student Ledis Molina and virtual production by MFA students Alberto Alvarez, Brandon Martinez, and Camilo Morales:
