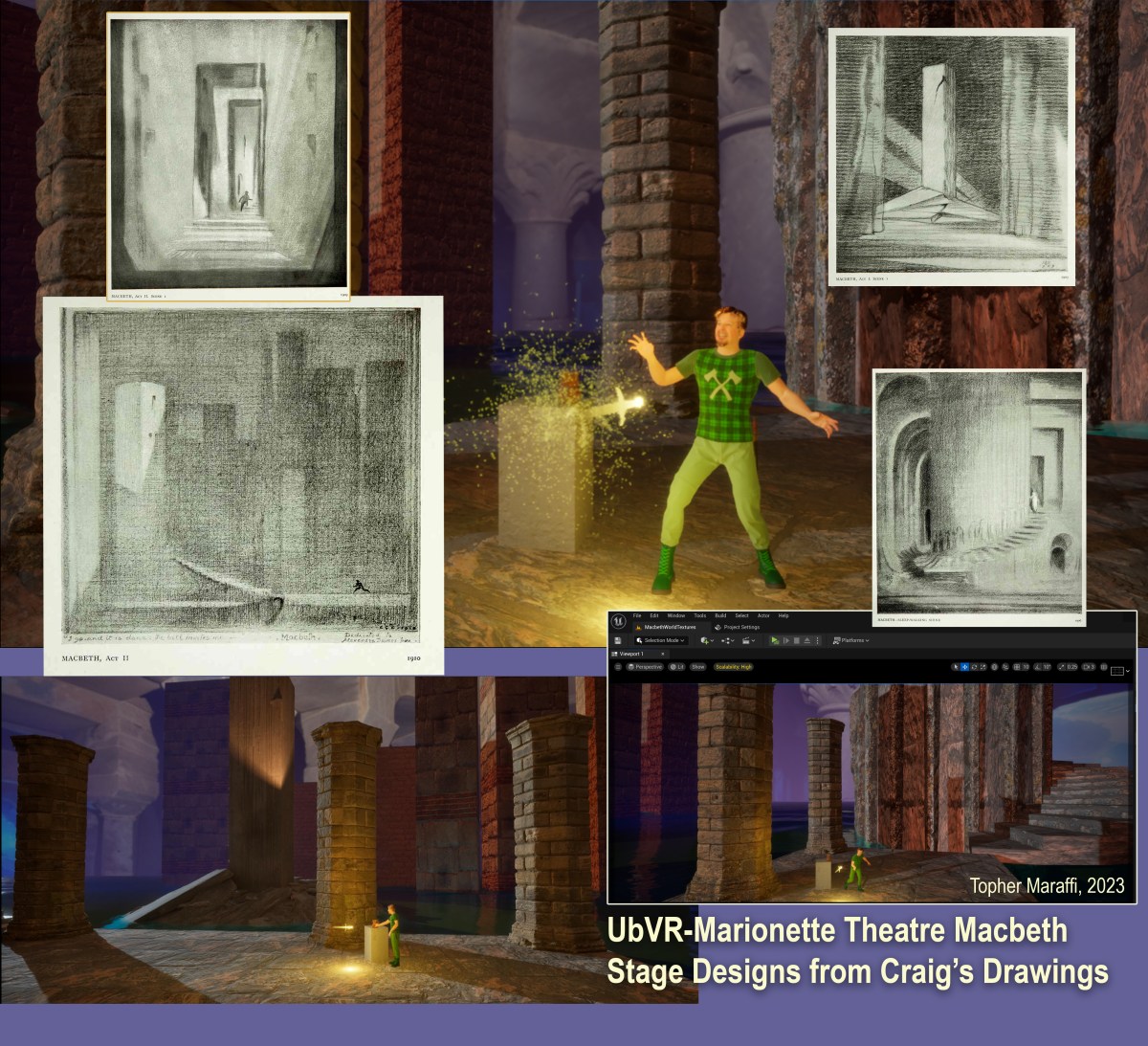

An exciting application of virtual production is a live theatre production in which actors appear to perform with life-sized Metahumans on a large LED wall. I’ve adapted the opening witches’ scene of Shakespeare’s Macbeth (Act 1, Scene 1) using Edward Gordon Craig’s 1908 concept of an Uber-Marionette, or super-puppet, combined with the concept of hyper-reality as defined in Curtis Hickman’s book Hyper-Reality: The Art of Designing Impossible Experiences (2023). Hickman has guest lectured in my NCSU Media Arts, Design and Technology (MADTech) courses on multiple occasions, and my graduate students will use his book as a guide for developing this project in our College of Design Virtual Production Lab (Brooks Hall 320) this spring semester.

The goal of this work is to use hyper-reality design principles to blur the separation between the virtual world on the screen and the live actor on stage, who casts a dynamic shadow into the Unreal Engine scene and seems to have expressive, improvised and supernatural interplay with the Metahuman witches. As Uber-Marionettes or super-puppets, the Metahuman doubles are performed by the live actor through hidden trigger boxes placed where the virtual space overlaps the real volume, and visual effects and lighting in both the real and virtual worlds are performed the same way. The entire virtual production system becomes a performative control mechanism for creating the illusion of impossible interaction, which is the essence of the hyper-reality design Hickman used to develop the location-based VR experiences at The VOID. Here is a short demo video created for SIGGRAPH SOIREE 2025:

Research Question: Can an actor use motion capture to drive life-sized Metahuman reactions in a virtual production volume so that there appears to be expressive, improvised, and supernatural interplay between the real and virtual figures in a live performance?

This hyper-reality adaptation of the opening scene of Macbeth is meant to demonstrate some of the ways that a theatrical treatment of Metahuman interaction in a virtual production volume can create mixed or extended reality (MR/XR) effects for a live audience without expensive and distracting headsets. Treating Metahumans as performing objects that meet the criteria of Craig’s super-puppet design requires real-time blending of refined performance capture data, so we are blending performance capture animation montages and visual effects sequences in real time by coding state machine changes in Blueprints. To create the illusion of liveness, we are building improvisation into the actor’s affordances for triggering state changes, as shown in this video for the SIGGRAPH 2025 Spatial Storytelling program:

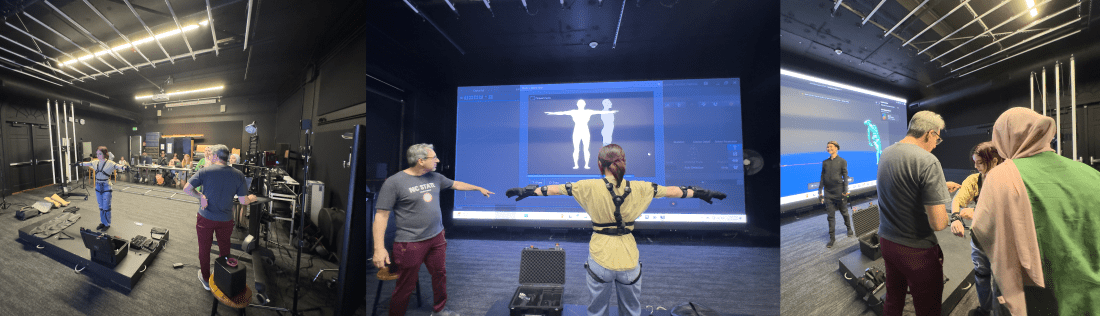
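The state-machine approach described above is coded in Unreal Blueprints, but its core idea (triggers move a witch between states, and each state offers several captured variations so repeated cues don't look canned) can be illustrated outside the engine. The following is a minimal Python sketch, assuming hypothetical state names, trigger names, and montage labels; it is not Unreal's API and does no animation blending itself, it only decides which montage a trigger should start.

```python
import random
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical transition table: (current state, trigger) -> next state.
# Any trigger not listed for the current state is simply ignored.
TRANSITIONS = {
    ("idle", "approach"): "alert",
    ("alert", "gesture"): "casting",
    ("alert", "retreat"): "idle",
    ("casting", "release"): "idle",
}

# Hypothetical performance-capture montage variations for each state.
# Choosing one at random on every entry is one way to build in variation.
MONTAGES = {
    "idle": ["idle_breathe_a", "idle_breathe_b"],
    "alert": ["alert_turn_a", "alert_turn_b", "alert_lean"],
    "casting": ["cast_thunder_a", "cast_thunder_b"],
}


@dataclass
class WitchStateMachine:
    state: str = "idle"
    rng: random.Random = field(default_factory=random.Random)
    history: list = field(default_factory=list)

    def on_trigger(self, trigger: str) -> Optional[str]:
        """Apply a trigger; return the montage to play, or None if ignored."""
        next_state = TRANSITIONS.get((self.state, trigger))
        if next_state is None:
            return None  # trigger is not valid in the current state
        self.state = next_state
        montage = self.rng.choice(MONTAGES[next_state])
        self.history.append((trigger, next_state, montage))
        return montage
```

For example, `WitchStateMachine().on_trigger("gesture")` returns `None` because the witch must be alerted first, while a following `on_trigger("approach")` returns one of the three alert variations. Keeping invalid triggers as no-ops lets the actor improvise freely without breaking the scene logic.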

In the prototyping phase of the project, we will collaborate with Shakespearean actors who will be photogrammetry scanned using RealityCapture to create 3D digital doubles, which will then be processed into fully costumed Metahuman witches. Next, we will performance capture an array of expressive body and facial variations for each witch using our Rokoko suit, head rig, and Metahuman Animator. To puppeteer the Metahuman witches in real time for an audience, a live Shakespearean actor wearing an inertial mocap suit under their costume will play the Metahumans like a musical instrument, striking spatially designed trigger boxes through a Live Link connection that controls a hidden Metahuman in the scene.
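In the engine, trigger volumes fire begin-overlap events from Unreal's collision system when the hidden Metahuman's body enters them. The logic underneath can be sketched in plain Python, assuming a single tracked joint (for example a hand) streamed in meters, one axis-aligned box per trigger, data already in Unreal's axis convention, and a hypothetical stage-origin offset. Unreal's default unit is the centimeter; the box names and coordinates below are invented for illustration.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def stage_to_unreal(p_m: Vec3, origin_cm: Vec3 = (0.0, 0.0, 0.0)) -> Vec3:
    """Convert a stage position in meters to Unreal units (centimeters).

    Assumes the mocap stream already uses Unreal's axes; only the scale and a
    hypothetical offset for where the stage origin sits in the level are applied.
    """
    return tuple(c * 100.0 + o for c, o in zip(p_m, origin_cm))


@dataclass
class TriggerBox:
    name: str
    min_cm: Vec3
    max_cm: Vec3
    occupied: bool = False  # was the joint inside on the previous frame?

    def contains(self, p: Vec3) -> bool:
        return all(lo <= c <= hi for c, lo, hi in zip(p, self.min_cm, self.max_cm))

    def update(self, p: Vec3) -> bool:
        """Return True only on the frame the joint enters the box (begin overlap)."""
        inside = self.contains(p)
        entered = inside and not self.occupied
        self.occupied = inside
        return entered


def step(boxes: list[TriggerBox], joint_pos_m: Vec3) -> list[str]:
    """Feed one frame of a tracked joint position; return the boxes just entered."""
    p = stage_to_unreal(joint_pos_m)
    return [b.name for b in boxes if b.update(p)]
```

Usage, frame by frame:

```python
boxes = [TriggerBox("reveal_thunder", (100.0, -50.0, 0.0), (150.0, 50.0, 200.0))]
print(step(boxes, (0.2, 0.0, 1.0)))   # []
print(step(boxes, (1.2, 0.0, 1.0)))   # ['reveal_thunder']
print(step(boxes, (1.25, 0.1, 1.1)))  # [] (still inside, so no re-fire)
```

Firing only on entry means an actor can hold a pose inside a box without retriggering the cue, and must step out and back in to strike it again, much like releasing and replaying a note on an instrument.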

The Metahuman puppetry illusions will be enhanced by using virtual lights and VFX to drive DMX image-based lighting and practical effects in the virtual production volume, which will further blur the aesthetic separation between the real stage and the Unreal Engine scene on the LED wall.
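One way to picture a virtual light "driving" a practical fixture is to quantize the light's color and intensity into DMX channel levels. A DMX512 universe has 512 channels, each holding an 8-bit level (0 to 255). The Python sketch below assumes a hypothetical 4-channel fixture profile (dimmer, red, green, blue) and a single virtual light with color components in the 0 to 1 range; real fixture profiles vary, image-based lighting would sample many pixels rather than one light, and sending the universe over a protocol such as Art-Net or sACN is out of scope.

```python
def to_dmx_byte(x: float) -> int:
    """Clamp a 0..1 value and quantize it to an 8-bit DMX level."""
    return round(max(0.0, min(1.0, x)) * 255)


def write_fixture(universe: bytearray, start_address: int,
                  rgb: tuple[float, float, float], intensity: float) -> None:
    """Write a hypothetical 4-channel fixture (dimmer, R, G, B) into a universe.

    start_address is 1-based, matching how DMX addresses are usually numbered.
    """
    if len(universe) != 512:
        raise ValueError("a DMX universe has 512 channels")
    if not 1 <= start_address <= 512 - 3:
        raise ValueError("fixture does not fit in the universe")
    i = start_address - 1
    universe[i] = to_dmx_byte(intensity)                        # dimmer channel
    universe[i + 1:i + 4] = bytes(to_dmx_byte(c) for c in rgb)  # R, G, B channels
```

For instance, mirroring a bluish-white virtual lightning flash onto a fixture patched at address 1:

```python
universe = bytearray(512)
write_fixture(universe, 1, (0.8, 0.85, 1.0), 1.0)
print(list(universe[:4]))  # [255, 204, 217, 255]
```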

Macbeth Metahuman: Performing Craig’s Uber-Marionette in Hyper-Reality by Topher Maraffi, NCSU MADTech

Extended abstract presented at the Journées d’Informatique Théâtrale Conference, 3rd Edition, Avignon, France, October 9, 2024:

Download the conference paper from HAL Open Science (07/01/2025)...

Download my JIT2024 presentation slides…

Download the hyper-reality script adaptation…

Recent strikes by the SAG-AFTRA labor union over fears that Hollywood studios will replace actors with AI-generated doubles (Yandoli 2023, Scherer 2024) echo similar fears from more than a century earlier, when Edward Gordon Craig proposed replacing live theatre actors with an “Uber-Marionette” (Craig 1908). Craig’s futuristic vision of a designed theatre with impossibly scaled sets and super-puppets performing with uncanny liveness required technological and artistic advancements that were not possible in his lifetime (Innes 1998, Degli Esposti 2015). His Shakespeare set designs were considered too vast to be practical, and his controversial Uber-Marionette concept has since faded into a mere metaphor for the perfect stage actor (Leabhart 2017, Le Boeuf 2010). But emerging data- and AI-driven technologies are bringing Craig’s impossible vision into focus as a perceptual reality, specifically hyper-reality as defined by Curtis Hickman, the magician behind numerous location-based VR experiences at The VOID theaters. Hickman’s definition of hyper-reality is platform agnostic: “The practical illusion of an impossible reality so convincing that the mind accepts it as reality itself” (Hickman 2023). He applied this concept through stage magic principles to develop critically acclaimed immersive VR experiences, including Disney’s Star Wars: Secrets of the Empire (2017) and Sony’s Ghostbusters: Dimensions (2016). Craig wrote about magic as the foundation of stagecraft (Craig 1921) and often framed his scenic and puppetry designs as spectacles that create a supernatural or magical aesthetic, such as his ideal design for the opening witches’ scene in Macbeth, which sets the tone for the entire play (Le Boeuf 2017).
Stage magic has driven an aesthetic of the impossible throughout the history of immersive media (North 2008), from Méliès’s pioneering visual effects in cinema to Hickman’s misdirected walking in VR, which gives the illusion of a performance space that is impossibly larger than the physical theater (Maraffi 2021).

The Hyper-Reality Theatre Project applies Craig’s puppet and masked theatre design concepts to world-building and intelligent character interaction in hyper-reality. The goal of this work is to explore the aesthetics of Metahumans as dynamic performing objects in a live experience, and to use real-time motion capture to allow actors to interact with intelligent super-puppets that create the illusion of presence and liveness. Craig’s concept of an Uber-Marionette will be used to examine hyper-reality experiences that feature digital doubles, such as virtual concerts like ABBA Voyage (2022) and Sakamoto’s Kagami (2023), or themed experiences like Universal’s Bourne Stuntacular (2020) that use computer-controlled sets and dynamic screens to blend real and virtual performers. I will also discuss how we are adapting Craig’s Shakespeare designs and his unpublished opening scene of Macbeth into a hyper-reality experience, using Metahuman super-puppets performing with live actors through our LED virtual production wall. I argue that Craig’s Uber-Marionette concept is not only being realized in hyper-reality experiences, but that his and other theatrical design frameworks (Maraffi 2022) are critical to the development of digital doubles as performing objects and will ultimately benefit actors as a new performance medium.

- Craig, E. G. (1908). The Actor and the Über-Marionette. The Mask, Vol. 1, No. 2. Florence: Self-published.

- Craig, E. G. (1983). On Learning Magic. In Craig on Theatre (p. 98). Internet Archive.

- Degli Esposti, P. (2015). The Fire of Demons and the Steam of Mortality: Edward Gordon Craig and the Ideal Performer. Theatre Survey, 56(1), 4-27.

- Hickman, C. (2023). Hyper-Reality: The Art of Designing Impossible Experiences. Independently published on Amazon.

- Innes, C. (1998). Edward Gordon Craig: A Vision of the Theatre. London: Routledge.

- Leabhart, T., & Leabhart, S. (2017). Edward Gordon Craig’s Übermarionette and Étienne Decroux’s “Actor Made of Wood.” Mime Journal, 26, Article 6. DOI: 10.5642/mimejournal.20172601.06

- Le Boeuf, P. (2010). On the Nature of Edward Gordon Craig’s Über-Marionette. New Theatre Quarterly, 26(2), 102-114.

- Le Boeuf, P. (2017). Two Unknown Essays by Craig on the Production of Shakespeare’s Plays. Mime Journal, 26(1), 123-134.

- Maraffi, C. (2021). Stage Magic as a Performative Design Principle for VR Storytelling. Cinergie – Il Cinema e le Altre Arti, 10(19), 93-104.

- Maraffi, C. (2022). VR Storytelling for Social Justice and the Ethics of Playing Black Bodies. Chapter 2 in The Changing Face of VR: Pushing the Boundaries of Experience Across Multiple Industries. Wilmington: Vernon Press.

- North, D. (2008). Performing Illusions: Cinema, Special Effects, and the Virtual Actor. London and New York: Wallflower Press.

- Scherer, M. (2024, January 24). The SAG-AFTRA Strike Is Over, But the AI Fight in Hollywood Is Just Beginning. Center for Democracy and Technology (AI Policy & Governance; Privacy & Data).

- Yandoli, K., & Richardson, K. (2023, November 17). ‘An Existential Threat’: Actors Troubled by SAG’s AI Deal With Hollywood. Rolling Stone.

Early Designs (Fall 2023 – Spring 2024)

Here is an early test of using a mocap suit to hit hidden triggers in an Unreal Engine scene displayed on a smaller version of our LED wall. In this case, the Metahuman avatar is visible, and the choreography is reversed for the actor. I performed this Halloween “Thriller” scene for visitors at our fall open house in October 2023:

My initial Macbeth Metahuman work is a trigger box test of a small section of the opening scene in Macbeth (Act 1, Scene 1), which features the entrance of three witches who represent the Greek Fates and the elements of thunder, lightning and rain. The live “Rain” witch puppeteers the other two Metahuman witches through a Live Link connection to an Unreal Engine scene on our Virtual Production Lab’s LED wall (Oct 2024). In this clip you can see me working out how to perform in the mocap suit in order to hit each UE5.4 trigger box. My semi-improvised performance dramatically moves the scene forward by revealing each witch and their associated VFX, dialogue, and elemental sounds:
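The reveal structure in that test can be modeled as scene-level bookkeeping: each witch reveal bundles its VFX, dialogue, and sound cues, the actor may strike the reveal triggers in any order, each reveal fires at most once, and the scene is complete when every witch is revealed. The Python sketch below assumes hypothetical cue names and is only an illustration of that logic, not the project's Blueprint code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RevealCue:
    """Hypothetical bundle of everything one witch reveal should fire."""
    witch: str
    vfx: str
    dialogue: str
    sound: str


class SceneProgress:
    """Tracks which Metahuman witches have been revealed by trigger hits."""

    def __init__(self, cues: list[RevealCue]):
        self.cues = {c.witch: c for c in cues}
        self.revealed: list[str] = []  # in the order the actor chose

    def hit(self, witch: str) -> Optional[RevealCue]:
        """Return the cue bundle to fire, or None for unknown or repeat hits."""
        if witch not in self.cues or witch in self.revealed:
            return None
        self.revealed.append(witch)
        return self.cues[witch]

    @property
    def complete(self) -> bool:
        return len(self.revealed) == len(self.cues)
```

Only the two Metahuman witches get cues here, since the live actor plays the third:

```python
scene = SceneProgress([
    RevealCue("Thunder", "vfx_storm_clouds", "vo_thunder_01", "sfx_thunder"),
    RevealCue("Lightning", "vfx_lightning_strike", "vo_lightning_01", "sfx_crackle"),
])
cue = scene.hit("Lightning")   # the actor chose to reveal Lightning first
print(cue.vfx, scene.complete) # vfx_lightning_strike False
```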
